Chain-of-Thought Prompting: It’s off the Chain

A simple "trick" that forces LLMs into thinking through their responses.

Happy Thursday, techno tinkerers,

The people (you) have spoken.

So today we’re looking at chain-of-thought prompting.

Take a deep breath, and let’s read this article step by step.

What is chain-of-thought prompting?

In a nutshell, chain-of-thought (CoT) prompting is any method that nudges a large language model into thinking through its response before answering.

It was first introduced in a 2022 paper called Chain-of-Thought Prompting Elicits Reasoning in Large Language Models.

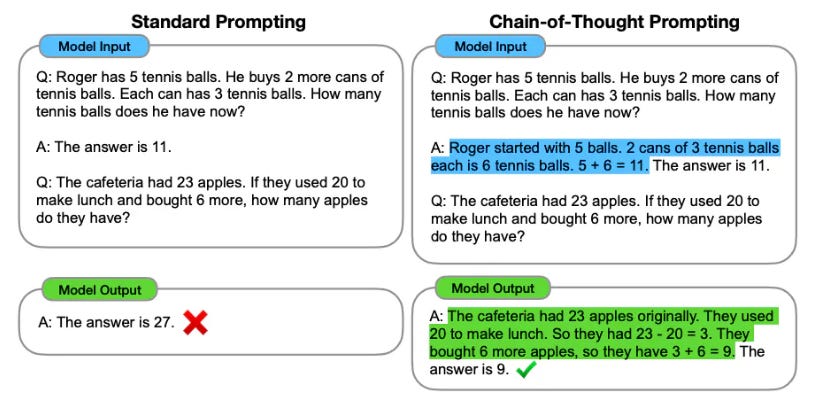

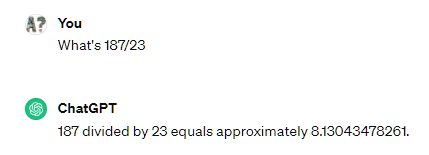

Here’s the first example from it:

Note how the standard prompt shows only the question and answer while the CoT one includes the reasoning steps.

That’s what CoT is all about.

But what exactly is the point of it?

Why use chain-of-thought prompting?

There are at least two good reasons you’d want to use CoT prompting in your interactions with LLMs.

1. Improved performance

I once compared AI chatbots to interns. And like any overeager intern, an LLM-powered chatbot might be quick to come back with an answer just to make you happy.

The problem is, in some cases, being fast also makes you wrong. It’s easy to make a mistake when you don’t take the time to think through the problem.

And yes: This also applies to large language models.

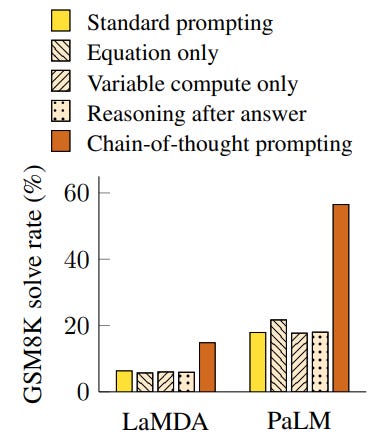

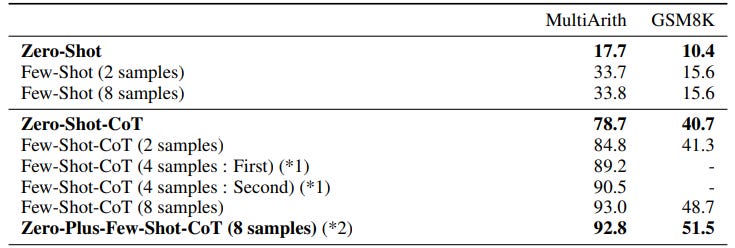

That’s why asking a model to work through the intermediate steps makes it better at certain tasks.1 Chain-of-thought prompting has been shown to dramatically improve different models’ performance on specific LLM benchmarks (GSM8K in the case below):

2. Transparency

The second reason to use CoT is that it gets the model to show its work.

No matter what happens, this is a win-win:

If the final answer is wrong, you’ll be able to identify the exact step where things went off the rails. This lets you adjust your approach and try again.

If the final answer is correct, you’ll have a better understanding of the model’s reasoning steps and the way it works under the hood.
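If you want to automate that first scenario a bit, here’s a minimal Python sketch that scans a chain-of-thought answer for simple arithmetic claims like “23 - 20 = 3” and returns the first one that doesn’t check out. The `first_bad_step` helper and its regex are my own illustration (not from the CoT paper or any library). It assumes integer claims written as `a op b = c`, so it won’t catch reasoning errors that aren’t spelled out as arithmetic.

```python
import re
from typing import Optional

# Hypothetical pattern for claims like "23 - 20 = 3" (integers only).
CLAIM = re.compile(r"(-?\d+)\s*([+\-*/])\s*(-?\d+)\s*=\s*(-?\d+)")


def apply_op(a: int, op: str, b: int) -> Optional[float]:
    """Compute a <op> b, returning None for division by zero."""
    if op == "+":
        return a + b
    if op == "-":
        return a - b
    if op == "*":
        return a * b
    return a / b if b != 0 else None


def first_bad_step(reasoning: str) -> Optional[str]:
    """Return the first arithmetic claim in `reasoning` that doesn't hold, or None."""
    for match in CLAIM.finditer(reasoning):
        a, op, b, claimed = match.groups()
        actual = apply_op(int(a), op, int(b))
        if actual is None or actual != int(claimed):
            return match.group(0)
    return None


# Chains modeled on the CoT paper's cafeteria example; the second one has a slip.
good = "So they had 23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 9."
bad = "So they had 23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 10."

print(first_bad_step(good))  # None
print(first_bad_step(bad))   # 3 + 6 = 10
```

In practice you’d still eyeball the steps yourself, but a check like this points you straight at the line where things went off the rails.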

Having said that, you won’t benefit from chain-of-thought prompting in every situation.

When to use CoT prompting?

Just because chain-of-thought prompting is effective doesn’t mean it’s always needed. In general, you’ll want to rely on CoT prompts in the following cases.

1. When trying to solve complex problems

For most everyday use cases, you likely won’t need CoT prompting.

If you’re just looking for creative ideas on decorating your kid’s birthday cake, it would be overkill to ask the model to work through every step with scientific precision and treat it like a military operation.

Where CoT really shines is in tasks that require multi-step math, commonsense reasoning, or symbolic reasoning.

2. When using a larger LLM (100B+ parameters)

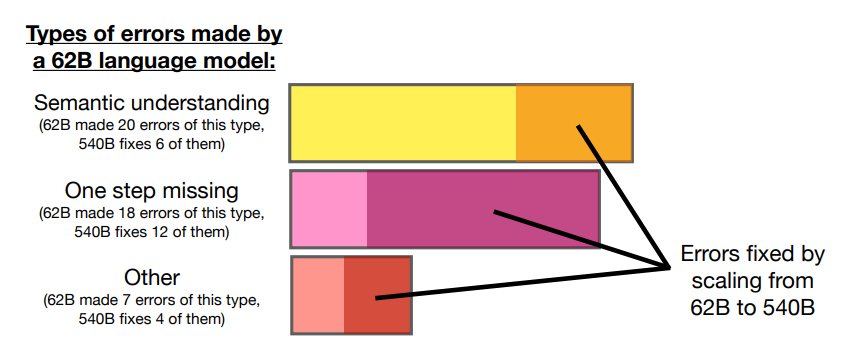

In the original paper, chain-of-thought prompting didn’t help models with fewer than about 100 billion parameters, because:

Smaller models are worse at generating long, consistent chains of thought[2]

They’re not able to identify the key steps required to solve difficult problems

For instance, scaling PaLM from 62B to 540B parameters was, on its own, enough to fix many of the reasoning and semantic-understanding errors the smaller model made:

As such, PaLM 540B is much better equipped to benefit from chain-of-thought prompting than its smaller cousin.

Here’s a Wikipedia list of LLMs that you can sort by the number of parameters to help you decide whether to use CoT prompting.
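Both criteria boil down to a rule of thumb you could write as a tiny Python function. Treat this as a hedged sketch. The task labels are my own shorthand for the categories above. The 100B cutoff reflects the original paper’s findings rather than any hard limit. And parameter counts for many closed models aren’t public anyway.

```python
# Illustrative labels for the task types the article recommends CoT for.
REASONING_TASKS = {"math", "commonsense", "symbolic"}

# Rough cutoff from the original CoT paper; not a hard limit.
MIN_PARAMS_BILLIONS = 100


def should_use_cot(task_type: str, params_billions: float) -> bool:
    """Rule of thumb: reach for CoT on reasoning-heavy tasks with large models."""
    is_reasoning_task = task_type.lower() in REASONING_TASKS
    is_large_model = params_billions >= MIN_PARAMS_BILLIONS
    return is_reasoning_task and is_large_model


print(should_use_cot("math", 540))             # True  (PaLM 540B-sized model)
print(should_use_cot("math", 62))              # False (PaLM 62B-sized model)
print(should_use_cot("cake-decorating", 540))  # False (creative task, not reasoning)
```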

Two ways to use CoT prompting

Broadly speaking, you can trigger chain-of-thought reasoning in two primary ways.

They involve using few-shot or zero-shot prompting, which we conveniently covered last week.

1. Few-shot CoT prompting

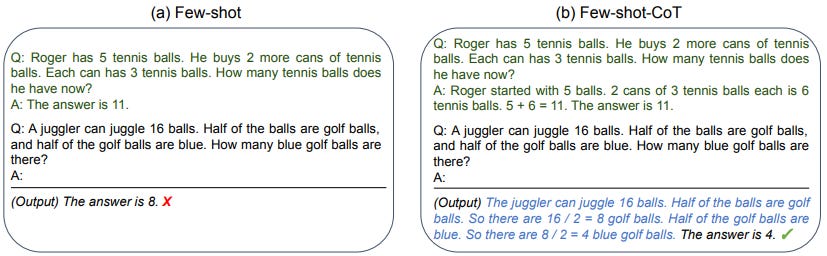

We’ve seen an example of this in the very first image.

Few-shot CoT prompting is all about feeding the model a few worked examples of how to approach a given problem.

Take a look:

Both examples above use a few-shot prompt[3] to show the model a sample answer.

But there’s one crucial difference: The first prompt (a) shows the answer itself without the reasoning behind it. The second one (b) includes the steps to arrive at the answer.

Because of this, the model can solve the next problem correctly by following similar steps on its own.

Few-shot prompting is great if you have a question that can be solved through a well-defined, easily showcased approach. You can prime the LLM by including a few similar problems in your few-shot prompt and help it along the way.
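Here’s a minimal Python sketch that builds both kinds of prompt from the same worked example. The tennis-ball and cafeteria questions come from the opening figure of the original CoT paper, and the “Q:/A:” layout with “The answer is …” mirrors that figure. The `WorkedExample` class and `build_few_shot_prompt` function are hypothetical names, and sending the prompt to a model is left out. With a single example this is technically one-shot, so add more `WorkedExample` entries for a true few-shot prompt.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WorkedExample:
    question: str
    reasoning: str  # the intermediate steps, written out
    answer: str


def build_few_shot_prompt(
    examples: List[WorkedExample], question: str, include_reasoning: bool = True
) -> str:
    """Assemble a Q/A-style prompt from worked examples plus a new question.

    include_reasoning=False -> standard few-shot prompt (answers only)
    include_reasoning=True  -> few-shot CoT prompt (steps, then answer)
    """
    blocks = []
    for ex in examples:
        if include_reasoning:
            blocks.append(f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}.")
        else:
            blocks.append(f"Q: {ex.question}\nA: The answer is {ex.answer}.")
    # End with the new question and an open "A:" for the model to complete.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


roger = WorkedExample(
    question=(
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?"
    ),
    reasoning=(
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
        "6 tennis balls. 5 + 6 = 11."
    ),
    answer="11",
)

cafeteria = (
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought "
    "6 more, how many apples do they have?"
)

print(build_few_shot_prompt([roger], cafeteria))
```

Passing `include_reasoning=False` produces the answer-only prompt (a), while the default produces the CoT version (b).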

But doing this takes some effort, requires a list of worked examples, and assumes you know how to solve similar problems in the first place.

It turns out, for most everyday tasks, there’s a far simpler way…

2. Zero-shot CoT prompting

Zero-shot chain-of-thought prompting is all about triggering the “thinking” process in an LLM without relying on worked examples.

How does it work?

You may want to sit down for this.

All you do is append the following line to your prompt:

“Let’s think step by step.”

Yup. That’s it!
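In code, zero-shot CoT is little more than string concatenation. Here’s a minimal Python sketch. In a chatbot, stage one below is usually all you need. The paper behind the trick (Large Language Models are Zero-Shot Reasoners) actually ran it in two stages. The first stage sends the question plus “Let’s think step by step.” The second stage feeds the model’s reasoning back and asks for just the final answer. The `generate` parameter and the `fake_generate` stand-in are hypothetical placeholders for whatever model API you use.

```python
import re
from typing import Callable

# Trigger phrase from "Large Language Models are Zero-Shot Reasoners".
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot_prompt(question: str, trigger: str = COT_TRIGGER) -> str:
    """Stage 1: the question, followed by the reasoning trigger."""
    return f"Q: {question}\nA: {trigger}"


def answer_extraction_prompt(stage1_prompt: str, reasoning: str) -> str:
    """Stage 2: feed the model's reasoning back and ask for just the answer."""
    return f"{stage1_prompt} {reasoning}\nTherefore, the answer (arabic numerals) is"


def zero_shot_cot(question: str, generate: Callable[[str], str]) -> str:
    """Run both stages; `generate` is a hypothetical prompt -> completion function."""
    stage1 = zero_shot_cot_prompt(question)
    reasoning = generate(stage1)
    raw_answer = generate(answer_extraction_prompt(stage1, reasoning))
    # Keep the first number in the reply (e.g. " 9." -> "9").
    match = re.search(r"-?\d+(?:\.\d+)?", raw_answer)
    return match.group(0) if match else raw_answer.strip()


def fake_generate(prompt: str) -> str:
    """Stand-in for a real LLM call, returning canned text for this demo."""
    if prompt.endswith("is"):
        return " 9."
    return "They had 23 apples and used 20, so 23 - 20 = 3. Then 3 + 6 = 9."


question = (
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought "
    "6 more, how many apples do they have?"
)
print(zero_shot_cot_prompt(question))
print(zero_shot_cot(question, fake_generate))  # 9
```

The `trigger` parameter makes it easy to swap in a different phrasing without touching the rest of the flow.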

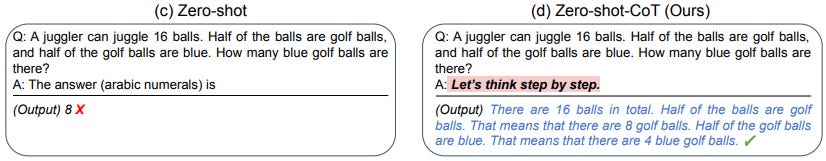

In a paper called Large Language Models are Zero-Shot Reasoners, researchers showed that simply adding that line to a prompt can massively increase an LLM’s performance:

Compared to the baseline Zero-shot prompt, the combined Zero-shot-CoT approach boosts the accuracy of the tested text-davinci-002 model from 17.7% to 78.7% on MultiArith and from 10.4% to 40.7% on GSM8K.[4]
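To put those numbers in perspective, the differences below are percentage points because the scores are accuracy percentages:

```latex
\begin{aligned}
\text{MultiArith:}\quad & 78.7 - 17.7 = 61.0 \text{ points}, & \frac{78.7}{17.7} &\approx 4.4\times \\
\text{GSM8K:}\quad & 40.7 - 10.4 = 30.3 \text{ points}, & \frac{40.7}{10.4} &\approx 3.9\times
\end{aligned}
```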

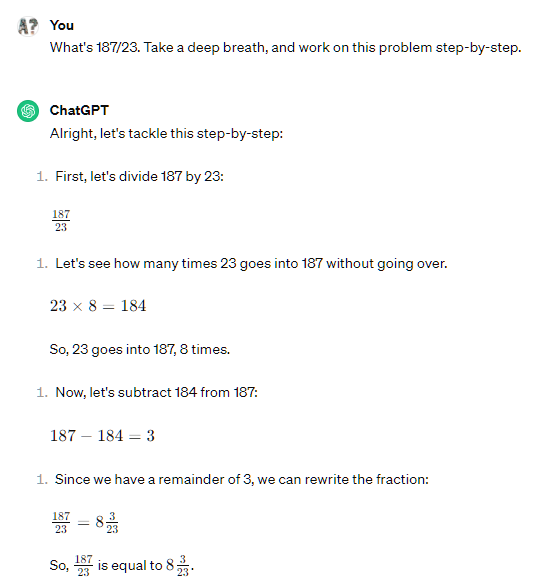

Where Zero-shot fails to elicit the right answer, Zero-shot-CoT often succeeds:

Feeling even more fancy?

You can take things up a notch with this ultra-advanced line instead:

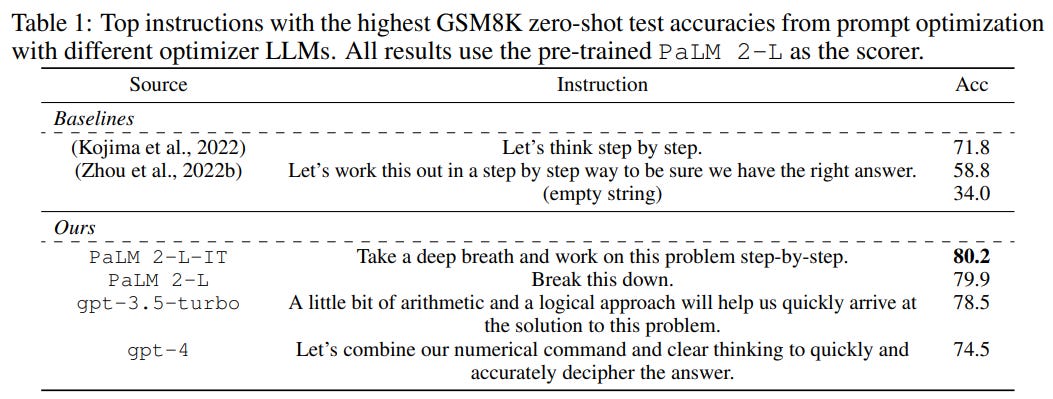

“Take a deep breath, and work on this problem step-by-step.”

I know. It sounds silly. Last I checked, LLMs don’t even have lungs.

And yet…

In another paper, Large Language Models as Optimizers, researchers had different LLMs come up with their own prompts to trigger CoT reasoning. “Take a deep breath and work on this problem step-by-step” ended up being the best-performing instruction when PaLM 2-L was tested on the GSM8K math benchmark.

So the next time you find an LLM chatbot struggling to help you solve complex problems, simply tell it to breathe deeply and see if it helps.[5]
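You don’t have to take a paper’s word for it. Here’s a small, hypothetical Python harness that checks whether a trigger phrase helps on your own problems by scoring each phrase on a handful of questions with known answers. `ask` stands in for your own model call and is assumed to return only the final answer (for example, via a two-stage answer extraction like the one used in the Zero-Shot Reasoners paper). The `fake_ask` model below always says “9”, so its scores are meaningless and only demonstrate the mechanics.

```python
from typing import Callable, Dict, List, Tuple

TRIGGERS = [
    "Let's think step by step.",
    "Take a deep breath and work on this problem step-by-step.",
]


def compare_triggers(
    problems: List[Tuple[str, str]],
    triggers: List[str],
    ask: Callable[[str], str],
) -> Dict[str, float]:
    """Return the fraction of problems each trigger phrase gets right.

    `problems` holds (question, expected_answer) pairs; `ask` is a hypothetical
    function that sends a prompt to a model and returns only its final answer.
    """
    if not problems:
        raise ValueError("need at least one problem")
    scores: Dict[str, float] = {}
    for trigger in triggers:
        correct = sum(
            ask(f"{question}\n{trigger}").strip() == expected
            for question, expected in problems
        )
        scores[trigger] = correct / len(problems)
    return scores


def fake_ask(prompt: str) -> str:
    """Toy stand-in that always answers "9", so the scores below mean nothing."""
    return "9"


problems = [
    ("The cafeteria had 23 apples. If they used 20 to make lunch and bought "
     "6 more, how many apples do they have?", "9"),
    ("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can "
     "has 3 tennis balls. How many tennis balls does he have now?", "11"),
]

for trigger, score in compare_triggers(problems, TRIGGERS, fake_ask).items():
    print(f"{score:.0%}  {trigger}")  # both print 50% with this fake model
```

With a real model and a few dozen problems, this gives you a quick, if rough, read on whether the deep breath is worth it for your use case.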

As mentioned in the “Transparency” section, there’s another good reason to use zero-shot CoT prompting: Getting an explanation of the model’s reasoning steps.

This is especially helpful in the context of education.

If a student simply asks the model a question, it’ll often just give a short answer:

Adding the “Take a deep breath…” line gets the LLM to show how to arrive at it:

This makes LLMs more useful as tutors.

Summing up

Here’s the TLDR version:

Few-shot CoT: If you have existing examples of how to solve a given problem, use few-shot chain-of-thought prompting to show these to the large language model before asking for a solution.

Zero-shot CoT: If you don’t, simply add “Take a deep breath, and work on this problem step-by-step” to your prompt, nudging the model to think through the intermediate steps.

Over to you…

If you weren’t already using CoT prompting, I hope this helped clarify what it’s about.

Do you already actively apply chain-of-thought prompting? If so, I’d love to hear any observations you’ve made about the process and its impact.

If you have any questions or want to suggest additions, I’m happy to hear from you.

Leave a comment or shoot me an email at whytryai@substack.com.

[1] In the opening example, the model prompted using CoT gives the correct answer for the number of apples in the cafeteria.

[2] In fact, according to the paper, forcing CoT on smaller models may end up reducing their accuracy.

[3] In this specific case, it’s technically a one-shot example.

[4] Few-shot-CoT prompting pushes these scores even further, but notably, Zero-shot-CoT requires no sample problems whatsoever.

[5] There are more advanced variations like automatic CoT prompting and tree-of-thought prompting. But for most of us, the examples in this post are plenty.
