Gemini 3: Google’s Silent Knockout Punch

Google proves that a "quiet" launch is enough, as long as you bring receipts.

TL;DR

Google launched the world’s best model with all the fanfare of a firmware update for a toaster, but the consensus speaks for itself.

What is it?

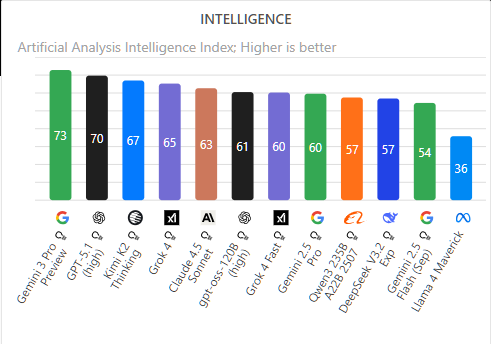

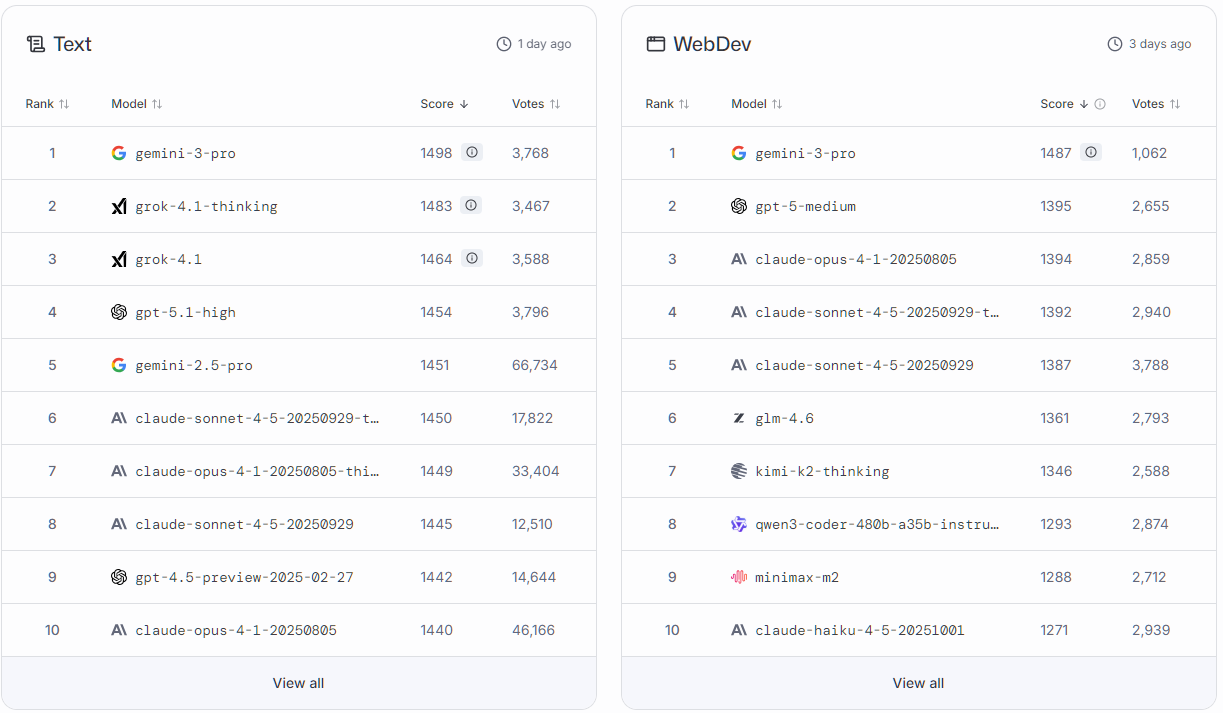

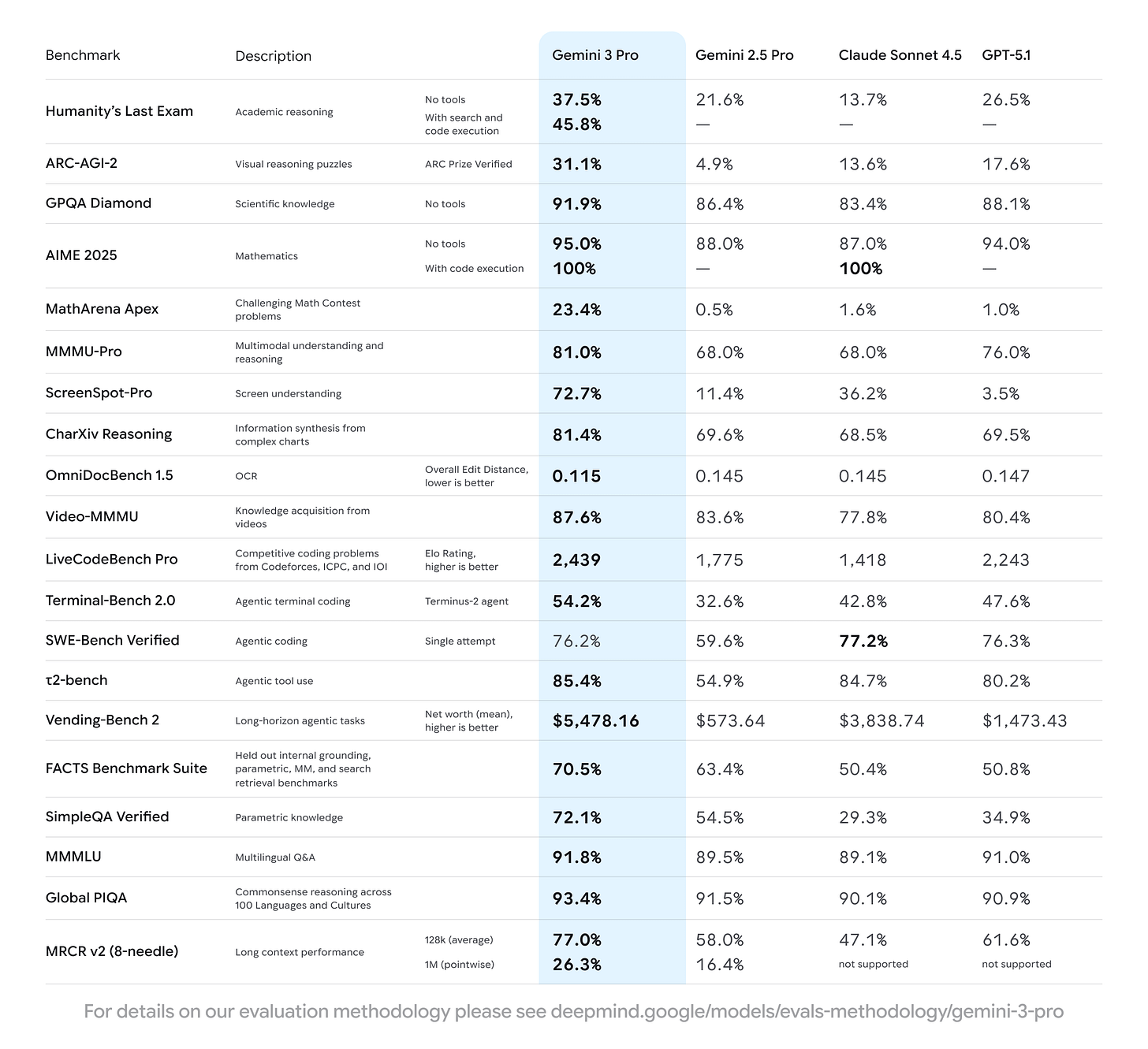

Simply put, Gemini 3 Pro is the best language model by virtually every measure. This isn’t a subjective value judgement. Here are the benchmarks:

Artificial Analysis Intelligence Index tells the same story:

So do the votes from real users on LMArena:

Even the normally hype-averse, high-signal channel AI Explained opens with: “For me, [Gemini 3] genuinely marks a new chapter in the race to true artificial intelligence.”

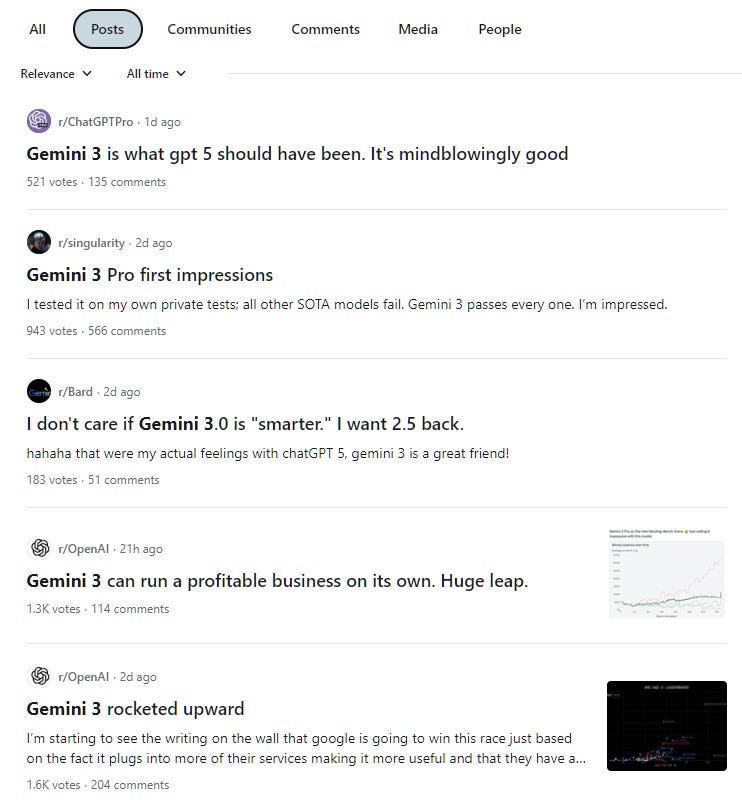

Reddit, typically the first place to surface real-world critical takes, is also largely positive:

I could keep going, but I’m sure I’ve made my point: Gemini 3 is genuinely, provably impressive across the board.

No, it isn’t flawless. Yes, it still hallucinates and makes silly errors. But it’s hard to argue with the fact that Gemini 3 Pro is currently the best model available.

How do you use it?

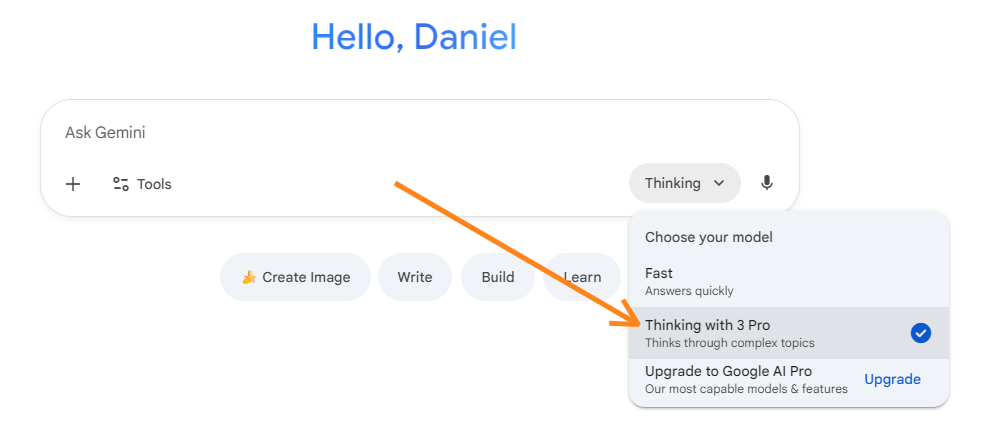

Good news: Gemini 3 Pro has already rolled out to all Gemini app users.

So just head to gemini.google.com and log in with your Google account:

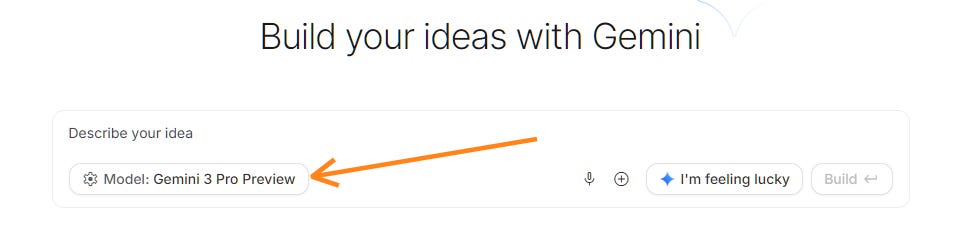

If you hit a message limit or simply prefer using Google AI Studio, you can also try Gemini 3 over at aistudio.google.com:

Go ahead: ask Gemini 3 to vibe code an app, perform research, review your work, analyze images, or run whatever other tests you use to put models through their paces.

Why should you care?

When OpenAI was about to announce GPT-5, the hype couldn’t have been any louder.

Sam Altman had been teasing the launch for days, culminating in this (in)famous and confusing tweet one day before the livestream:

The livestream itself lasted almost 1.5 hours, with the team showcasing their new model’s capabilities like proud parents parading their child at a beauty pageant:

Many have since speculated that all this pre-launch publicity and showmanship was a big reason the rollout ultimately felt underwhelming.

When Gemini 3 launched, Google…quietly published a few text-heavy blog posts.

That’s it.

No flashy livestreams. No presentations. No pre-launch hype cycle.

Not even a single premature Death Star tweet by Sundar Pichai.

As you know, I’ve been railing against excessive hype for as long as this newsletter has existed.

So to me, it’s nice to see a company letting a model’s real-world performance do the heavy lifting without having to hype it up. Google’s approach proves that this path works, as long as your model delivers the goods.

I really wish this were the default in the GenAI space.

Who knows, maybe the launch of Gemini 3 marks the moment we all collectively take a chill pill and start talking about major launches in a calm and measured way.

Right?

Damn it!

Further reading & watching

“Gemini 3 and Google’s Antigravity Trajectory” - AI Ignorance

“Google Gemini 3 Is a Powerhouse” [YouTube] - Theoretically Media

“Google Gemini 3 Is the Best Model Ever. One Score Stands Out Above the Rest” - The Algorithmic Bridge

“Gemini 3 just crushed everything” [YouTube] - AI Search

“Three Years from GPT-3 to Gemini 3” - One Useful Thing

“Vibe Check: Gemini 3 Pro, A Reliable Workhorse With Surprising Flair” - Every

🫵 Over to you…

What are your first impressions of Gemini 3 Pro? How does it compare to your current go-to model? Do you have any practical use cases to share?

Leave a comment or drop me a line at whytryai@substack.com.

Thanks for reading!


Why Try AI is a passion project, and I’m grateful to those who help keep it going. If you’d like to support my work and unlock cool perks, consider a paid subscription.

Google invented the Transformer what, like (does math in head without an AI … thinking for a better answer …) 8 years ago? While I’m not an unfettered fan of the biggest monopolist in the world, in a way this is their birthright. It’s their race to lose.

I’ve been going through some editing passes with ChatGPT for a piece tomorrow, trying to improve the flow, and I was getting frustrated trying to keep it on task. I didn’t want any language suggestions, just surgical analysis of where the thread might get lost, where it could use a better transition, etc. So I popped over to Gemini (and tbh this was the web version, so I’m not sure it was even 3.0). Gemini highlighted the one rough spot right away, which I worked over and then sent back to ChatGPT. I told it I was playing Gemini against it, but regardless, it agreed this rev worked better.

Then, of course, it offered more. Maybe it wanted to show up Gemini. It went totally off the rails, citing text that was not in the piece at all (and, as far as I remember, not in an earlier draft either). I think it got madly jealous.

So I started over in a new chat and then it calmed down.

As I understand it, the model stands out largely because it understands both you and the context better. Is that a fair takeaway? Granted, I need to play with this model a great deal more before I really weigh in.