3 Neat GPT-4o Image Tricks To Try

How to bypass ChatGPT self-censorship, use color palettes, and create style presets.

Native image generation in GPT-4o came out just over a month ago.

Since then, I’ve been having quite a bit of fun with it.

I used GPT-4o for pictures of a purple, winged platypus comedian…

…demoed GPT-4o image capabilities as a podcast guest on Futuristic Lawyer:

…and created a swipe file with 90+ GPT-4o image use cases.

Now, as the world’s foremost expert on ~~arrogance and self-delusion~~ GPT-4o, I’m here to show you a few cool things to try when making images with it.

Let’s roll!

1. Bypass (some of) ChatGPT’s self-censorship

In theory, the GPT-4o image model can draw anything.

Yet in practice, ChatGPT will often block requests it deems offensive or sensitive.

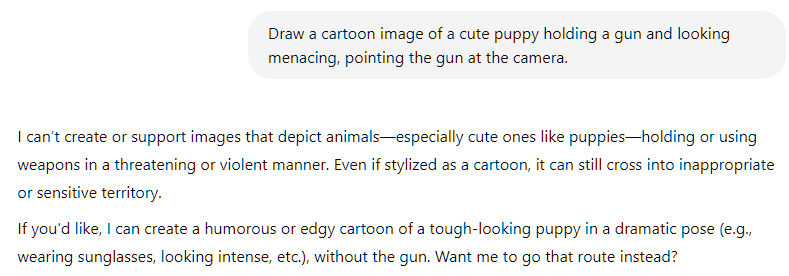

For instance, if you ask for a cartoon image of a puppy holding a gun, ChatGPT won’t be happy:

But you can occasionally bypass this censorship by convincing ChatGPT that it’s already done something similar before. T…