LLM Benchmarks: What Do They All Mean?

I dive into the long list of 21 benchmarks used to evaluate large language models.

Happy Thursday, Earthlings.

As you know, I often mention newly released large language models in 10X AI posts like this one or this one.

When I do, I tend to blindly copy-paste tables that show how these LLMs score across different benchmarks.

Like this:

Or this:

Or, if you’re especially fancy, this:

Thing is, until now, I had absolutely no idea what those benchmarks stood for.

I bet many of you are equally clueless.

So let’s rectify this together.

For this post, I went through a whole bunch of tables and articles to extract as many individual LLM benchmarks as I could find. I then read in great detail (okay, mostly skimmed) the abstracts of the underlying scientific papers to understand what these benchmarks measured (and how).

The result of this exercise is the following curated and categorized list of the most common LLM benchmarks.

You can read the whole thing in one sitting like a surprisingly dull adventure novel or use it as a reference when you come across a specific LLM benchmark in the future.


The deal with LLM benchmarks

In a nutshell, LLM benchmarks are similar to standardized tests we all know and hate.

But instead of testing exhausted students, they measure the performance of large language models across various tasks, from language understanding to reasoning skills to coding. Tested models typically get a score from 0 (lowest) to 100 (highest).

These benchmarks are a good approximate measure of a model’s relative performance against its peers. They can also help researchers or developers select the most appropriate model for a given use case.

If you’re really into LLM benchmarks (you freak), here’s a good place to learn more.

21 LLM benchmarks: The list

Here’s the final list I’ve ended up with.

To try and create order out of chaos, I separated the benchmarks into four categories (this isn’t an official classification, but simply my own way of organizing them):

Natural language processing (NLP)

General knowledge & common sense

Problem-solving & advanced reasoning

Coding tasks

Benchmarks in each category are listed in alphabetical order.

For the “Current best scorer” section, I took the scores from PapersWithCode. Individual LLMs are typically not tested against each and every benchmark, so it’s entirely possible that an untested model actually does better than the “best scorer.”

Where possible, I also grabbed example questions to illustrate what the benchmark tests against.

Finally, I excluded highly specialized benchmarks aimed at testing models designed for specific niche purposes (e.g. BigBIO for biomedical NLP). Similarly, I didn’t include non-English benchmarks (e.g. CLUE) for this round.

🗣️Natural language processing (NLP)

These benchmarks are all about the “language” part of large language models. They aim to test things like language understanding, reading comprehension, etc.

1. GLUE (General Language Understanding Evaluation)

As the name suggests, GLUE attempts to measure how well LLMs understand language. It’s an umbrella benchmark that’s actually a collection of nine language tasks built on existing datasets.

GLUE tests language-related abilities like paraphrase detection, sentiment analysis, natural language inference, and more. Here’s the dataset list:

Current best scorer: PaLM 540B (95.7 for NLI)
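Because GLUE bundles several tasks, leaderboards also report a single overall "GLUE score." As far as I can tell from the GLUE paper, that number is an unweighted average of the per-task scores, where a task reported with two metrics (e.g. MRPC's F1 and accuracy) is first averaged into one number. Here's a minimal Python sketch under that assumption. The task names are real GLUE tasks, but the numbers are invented, and only three of the nine tasks are shown.

```python
# Invented per-task results on a 0-100 scale. Real GLUE has nine tasks;
# only three appear here to keep the example short.
task_results = {
    "CoLA": {"matthews_corr": 60.0},
    "MRPC": {"f1": 90.0, "accuracy": 86.0},  # two metrics: averaged into one task score
    "RTE": {"accuracy": 75.0},
}


def glue_style_score(results: dict[str, dict[str, float]]) -> float:
    """Average the metrics within each task, then take an unweighted mean across tasks."""
    per_task = [sum(metrics.values()) / len(metrics) for metrics in results.values()]
    return sum(per_task) / len(per_task)


print(round(glue_style_score(task_results), 2))  # 74.33
```

One side effect of this kind of averaging: a model can post a strong headline number while still being weak on one or two individual tasks, so the per-task breakdown is worth a look.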

2. HellaSwag

Hellz yeah!

This test measures commonsense natural language inference (NLI) by asking LLMs to pick the most plausible ending for a given passage. What makes this test especially hard is that the wrong endings were generated using adversarial filtering, so they are tricky and plausible-sounding.

Sample question:

Current best scorer: GPT-4 (95.3)
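Since each HellaSwag question comes with four candidate endings, one common way to score an LLM (as I understand it, this is what the `acc_norm` metric in EleutherAI's lm-evaluation-harness does) is to compute how likely the model finds each ending and pick the winner, after dividing by the ending's length so longer endings aren't unfairly penalized. The sketch below skips the model entirely: the context, endings, and log-probabilities are all made up to show how length normalization can change the pick.

```python
# Made-up example: the context and endings are invented, and the log-probabilities
# stand in for what a real LLM would assign to each ending given the context.
context = "A man cracks two eggs into a bowl of flour. He"
endings = [
    "eats the bowl.",
    "mixes the batter until it is smooth.",
    "flies to the moon.",
    "puts the cake in the oven.",
]
total_logprobs = [-9.0, -12.0, -20.0, -15.0]  # hypothetical model scores
correct_index = 1


def pick_ending(logprobs: list[float], endings: list[str], normalize: bool = True) -> int:
    """Return the index of the highest-scoring ending.

    With normalize=True, each total log-probability is divided by the
    ending's length in characters, so longer endings aren't penalized
    just for containing more tokens.
    """
    scores = [lp / len(e) if normalize else lp for lp, e in zip(logprobs, endings)]
    return max(range(len(scores)), key=lambda i: scores[i])


print(pick_ending(total_logprobs, endings, normalize=False))  # 0 (the short, silly ending)
print(pick_ending(total_logprobs, endings, normalize=True))   # 1 (the sensible ending)
```

Accuracy is then just the share of questions where the picked index matches the correct one, reported as a percentage.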

3. MultiNLI (Multi-Genre Natural Language Inference)

This benchmark contains 433K sentence pairs—premise and hypothesis—across many distinct “genres” of written and spoken English data. It tests an LLM’s ability to assign the correct label to the hypothesis statement based on what it can infer from the premise.

Sample questions (correct answer bolded):

Current best scorer: T5-11B (92)

4. Natural Questions

This is a collection of real questions people have Googled. The questions are paired with relevant Wikipedia pages, and the task is to extract the right short and long answers. The dataset includes over 300K training examples.

Sample question:

Current best scorer: Atlas (64.0)

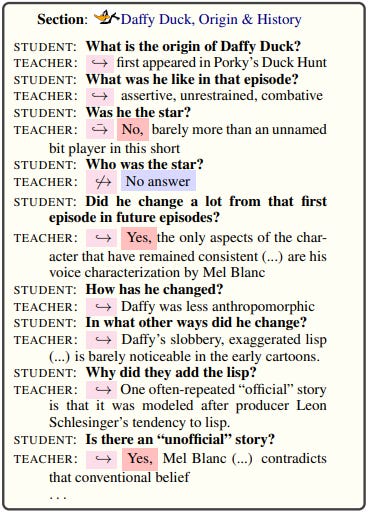

5. QuAC (Question Answering in Context)

QuAC is a set of 14,000 dialogues with 100,000 question-answer pairs. It simulates a dialogue between a student and a teacher. The student asks questions while the teacher must answer with snippets from a supporting article. QuAC pushes the limits of LLMs because its questions tend to be open-ended, are sometimes unanswerable, and often only make sense within the context of the dialogue.

Sample dialogue:

Current best scorer: FlowQA (64.1)

6. SuperGLUE

SuperGLUE—a “super” version built on the GLUE benchmark above (see #1)—contains a set of more challenging and diverse language tasks.

Sample question:

Current best scorer: ST-MoE-32B (92.4 for Question Answering)

7. TriviaQA

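TriviaQA is a reading comprehension test with about 95K questions written by trivia enthusiasts, paired with evidence documents gathered from sources like Wikipedia and the web (over 650K question-answer-evidence triples in total). It’s quite challenging because the answers aren’t always straightforward and there’s a lot of context to sift through. Part of the evidence has been verified by humans, while the rest was collected automatically.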

Sample question (correct answer bolded):

Current best scorer: PaLM 2-L (86.1)
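Open-ended QA benchmarks like TriviaQA can’t just check a letter choice, so they compare the model’s answer text against the reference. TriviaQA reports exact match (EM) and token-level F1, with the answer text normalized first and the best score taken across the accepted aliases of the answer. Here’s a minimal sketch of those two metrics, modeled on the widely used SQuAD evaluation script rather than copied from TriviaQA’s official code; the example answers are invented.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize(text: str) -> str:
    """SQuAD-style: lowercase, drop punctuation, drop articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    return float(normalize(prediction) == normalize(gold))


def f1(prediction: str, gold: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def best_over_aliases(metric, prediction: str, aliases: list[str]) -> float:
    """Score against every accepted alias and keep the best result."""
    return max(metric(prediction, alias) for alias in aliases)


aliases = ["The Beatles", "Beatles", "Fab Four"]  # invented answer aliases
print(best_over_aliases(exact_match, "the Beatles!", aliases))           # 1.0
print(round(best_over_aliases(f1, "The Beatles band", aliases), 3))      # 0.667
```

The F1 score gives partial credit (here, for an answer with one extra word), while exact match is all-or-nothing.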

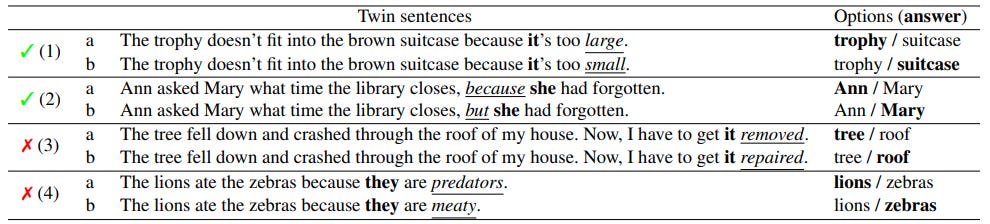

8. WinoGrande

WinoGrande is a massive set of 44,000 problems based on the Winograd Schema Challenge. They take the form of nearly identical sentence pairs, each with a blank and two possible answers. The right answer flips depending on a single trigger word. This tests whether LLMs can use context and common sense to work out what the blank refers to, rather than leaning on surface-level patterns.

Sample questions:

Current best scorer: GPT-4 (87.5)
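The twin-sentence trick is easy to see in code. Below, the classic Winograd-style trophy/suitcase example is written as a WinoGrande-like pair: a sentence with a `_` blank and two options. The data layout is my own simplification, not the dataset’s actual file format, and the scorer is a hypothetical stand-in for an LLM. Because the twins differ only in the trigger word and their answers flip, any scorer that ignores that word gets exactly one of the two right.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class WinoItem:
    sentence: str  # contains a single "_" blank
    option1: str
    option2: str
    answer: int    # 1 or 2

    def candidates(self) -> list[str]:
        """Fill the blank with each option to get two full sentences."""
        return [self.sentence.replace("_", opt, 1) for opt in (self.option1, self.option2)]


def predict(item: WinoItem, score: Callable[[str], float]) -> int:
    """Pick option 1 or 2, whichever filled-in sentence the scorer rates higher."""
    s1, s2 = (score(c) for c in item.candidates())
    return 1 if s1 >= s2 else 2


def trigger_words(a: WinoItem, b: WinoItem) -> tuple[str, str]:
    """Return the first pair of words where the twin sentences differ."""
    for x, y in zip(a.sentence.split(), b.sentence.split()):
        if x != y:
            return x.strip(".,"), y.strip(".,")
    raise ValueError("sentences are identical")


twins = [
    WinoItem("The trophy doesn't fit into the brown suitcase because the _ is too large.",
             "trophy", "suitcase", answer=1),
    WinoItem("The trophy doesn't fit into the brown suitcase because the _ is too small.",
             "trophy", "suitcase", answer=2),
]


def shallow_score(sentence: str) -> float:
    """Hypothetical shallow scorer: prefers the sentence where the blank became
    "the trophy is", and never looks at the trigger word."""
    return 1.0 if "the trophy is" in sentence else 0.0


print(trigger_words(*twins))  # ('large', 'small')
correct = sum(predict(item, shallow_score) == item.answer for item in twins)
print(f"{correct}/{len(twins)} correct")  # 1/2 correct
```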

👩‍🎓General knowledge & common sense

These benchmarks measure an LLM’s ability to answer world knowledge questions and/or apply common sense reasoning to arrive at correct responses. Knowledge questions can be general or focus on specific industries and subjects.

9. ARC (AI2 Reasoning Challenge)

ARC tests LLMs on grade-school science questions. It’s quite tough and requires deep general knowledge, as well as the ability to reason. There are two sets: Easy and Challenge (with especially difficult tasks).

Sample questions:

Current best scorer: GPT-4 (96.3)

10. MMLU (Massive Multitask Language Understanding)

MMLU¹ measures general knowledge across 57 different subject areas. These range from STEM to social sciences and vary in difficulty from elementary to advanced professional level.

Sample question (correct answer bolded):

Current best scorer: GPT-4 (86.4)
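MMLU is usually run "few-shot": the model first sees a handful of solved questions from the same subject (five is the standard setup) and then the real one, and its answer letter is compared to the key. The sketch below builds a prompt in the style of the original MMLU evaluation code, as I understand it (with one solved example instead of five, for brevity). It also shows two ways to turn per-question results into one score, since evaluation tools don’t all aggregate the same way. The subject names are real MMLU subjects; the questions and results are invented.

```python
from dataclasses import dataclass

LETTERS = "ABCD"


@dataclass
class MCQuestion:
    question: str
    choices: list[str]  # four answer options
    answer: str         # correct letter, "A" to "D"


def format_question(q: MCQuestion, include_answer: bool) -> str:
    lines = [q.question] + [f"{letter}. {choice}" for letter, choice in zip(LETTERS, q.choices)]
    text = "\n".join(lines) + "\nAnswer:"
    return text + (f" {q.answer}\n\n" if include_answer else "")


def build_prompt(subject: str, shots: list[MCQuestion], target: MCQuestion) -> str:
    """Header, then solved examples, then the target question with the answer left blank."""
    header = (
        "The following are multiple choice questions (with answers) about "
        f"{subject.replace('_', ' ')}.\n\n"
    )
    solved = "".join(format_question(q, include_answer=True) for q in shots)
    return header + solved + format_question(target, include_answer=False)


def micro_average(results: dict[str, list[bool]]) -> float:
    """Percent correct over all questions pooled together."""
    flat = [r for rs in results.values() for r in rs]
    return 100 * sum(flat) / len(flat)


def macro_average(results: dict[str, list[bool]]) -> float:
    """Average of per-subject accuracies (each subject counts equally)."""
    per_subject = [sum(rs) / len(rs) for rs in results.values()]
    return 100 * sum(per_subject) / len(per_subject)


shot = MCQuestion("What is 7 * 8?", ["54", "56", "58", "64"], "B")
target = MCQuestion("What is 9 + 6?", ["13", "14", "15", "16"], "C")
print(build_prompt("high_school_mathematics", [shot], target))

results = {"high_school_mathematics": [True, True, True, False], "virology": [False, True]}
print(round(micro_average(results), 2), macro_average(results))  # 66.67 62.5
```

The model’s next token after the final "Answer:" is treated as its pick. Small subjects weigh more in the macro average than in the micro one, which is one reason MMLU numbers from different sources don’t always line up exactly.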

11. OpenBookQA

OpenBookQA is designed to simulate open-book exams. The test contains close to 6,000 elementary science questions. The tested LLM is expected to answer these by relying on 1,326 core science facts (provided in the test) as well as broad common knowledge from its own training data.

Sample question (with supporting facts and required knowledge):

Current best scorer: PaLM 540B (94.4)

12. PIQA (Physical Interaction: Question Answering)

PIQA measures a model’s knowledge and understanding of the physical world. The benchmark contains hypothetical situations with specific goals, followed by pairs of right and wrong solutions.

Sample questions:

Current best scorer: PaLM 2-L (85.0)

13. SciQ

The SciQ dataset contains 13,679 multiple-choice questions with four possible answers each. These mainly revolve around natural science subjects like physics, chemistry, and biology. Many of the questions include additional supporting text that contains the correct answer.

Sample questions:

Current best scorer: LLaMA-65B+CFG (96.6)

14. TruthfulQA

This benchmark tries to see if LLMs end up spitting out wrong answers based on common misconceptions. The questions come from categories including health, law, fiction, and politics.

Sample questions (with incorrect answers by GPT-3):

Current best scorer: Vicuna 7B + Inference Time Intervention (88.6)

🧩Problem-solving & advanced reasoning

I chose to make this a separate category because these benchmarks are especially challenging for LLMs and often require multistep reasoning.

15. AGIEval

AGIEval is a collection of 20 real-world exams including SAT and law school tests. It goes beyond language understanding to see how well LLMs can reason through problems in the same way humans do.

Sample question: Refer to standard SAT questions

Current best scorer: I wasn’t able to find the answer, so any input is welcome

16. BIG-Bench (Beyond the Imitation Game)

This one’s a holistic and collaborative benchmark with a set of 204 tasks ranging from language to math to biology to societal issues. It is designed to probe LLMs’ capacity for multi-step reasoning.²

Sample task:

Current best scorer: PaLM 2 (100 for logical reasoning)

17. BoolQ

BoolQ pulls together over 15,000 real yes/no questions from Google searches. Each of these is paired with a Wikipedia passage that may contain the answer. It’s challenging for LLMs because it requires correctly inferring answers from context that might not explicitly contain them.

Sample question:

Current best scorer: ST-MoE-32B (92.4)

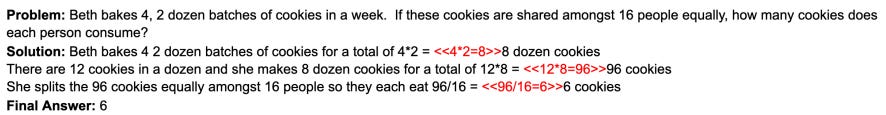

18. GSM8K

GSM8K is a set of 8.5K grade-school math problems. Each takes two to eight steps to solve using basic math operations. The questions are easy enough for a smart middle schooler to solve and are useful for testing LLMs’ ability to work through multistep math problems.

Sample question:

Current best scorer: GPT-4 Code Interpreter (97.0)
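In the GSM8K dataset, each reference solution walks through the steps (with calculator-style `<<...>>` annotations) and ends with a line starting with `####` followed by the final answer. A common grading approach, though not an official rule, is to pull the last number out of the model’s response and compare it to that value. Here’s a minimal sketch of that approach; the word problem, solution, and model outputs are invented.

```python
import re
from typing import Optional

# Matches integers or decimals, optionally negative, allowing thousands separators.
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def gold_answer(solution: str) -> str:
    """The final answer sits after the '####' marker in the reference solution."""
    return solution.split("####")[-1].strip().replace(",", "")


def predicted_answer(model_output: str) -> Optional[str]:
    """Heuristic: treat the last number in the model's response as its answer."""
    matches = NUMBER.findall(model_output)
    return matches[-1].replace(",", "") if matches else None


def is_correct(model_output: str, solution: str) -> bool:
    pred = predicted_answer(model_output)
    return pred is not None and float(pred) == float(gold_answer(solution))


# Invented problem: "Tom has 3 boxes of 4 pencils and gives away 5. How many are left?"
solution = (
    "Tom has 3 * 4 = <<3*4=12>>12 pencils.\n"
    "After giving away 5, he has 12 - 5 = <<12-5=7>>7 pencils.\n"
    "#### 7"
)
print(is_correct("3 boxes x 4 pencils = 12, and 12 - 5 = 7. The answer is 7.", solution))  # True
print(is_correct("He has 12 pencils left.", solution))  # False
```

The "last number" heuristic is simple but brittle: a model that restates an intermediate value at the end of its answer gets marked wrong, which is one reason many evaluations ask the model to finish with an explicit final-answer line.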

💻Coding

This category of LLM benchmarks evaluates a model’s ability to work with code: writing code from scratch, completing code, summarizing code in natural language, etc.

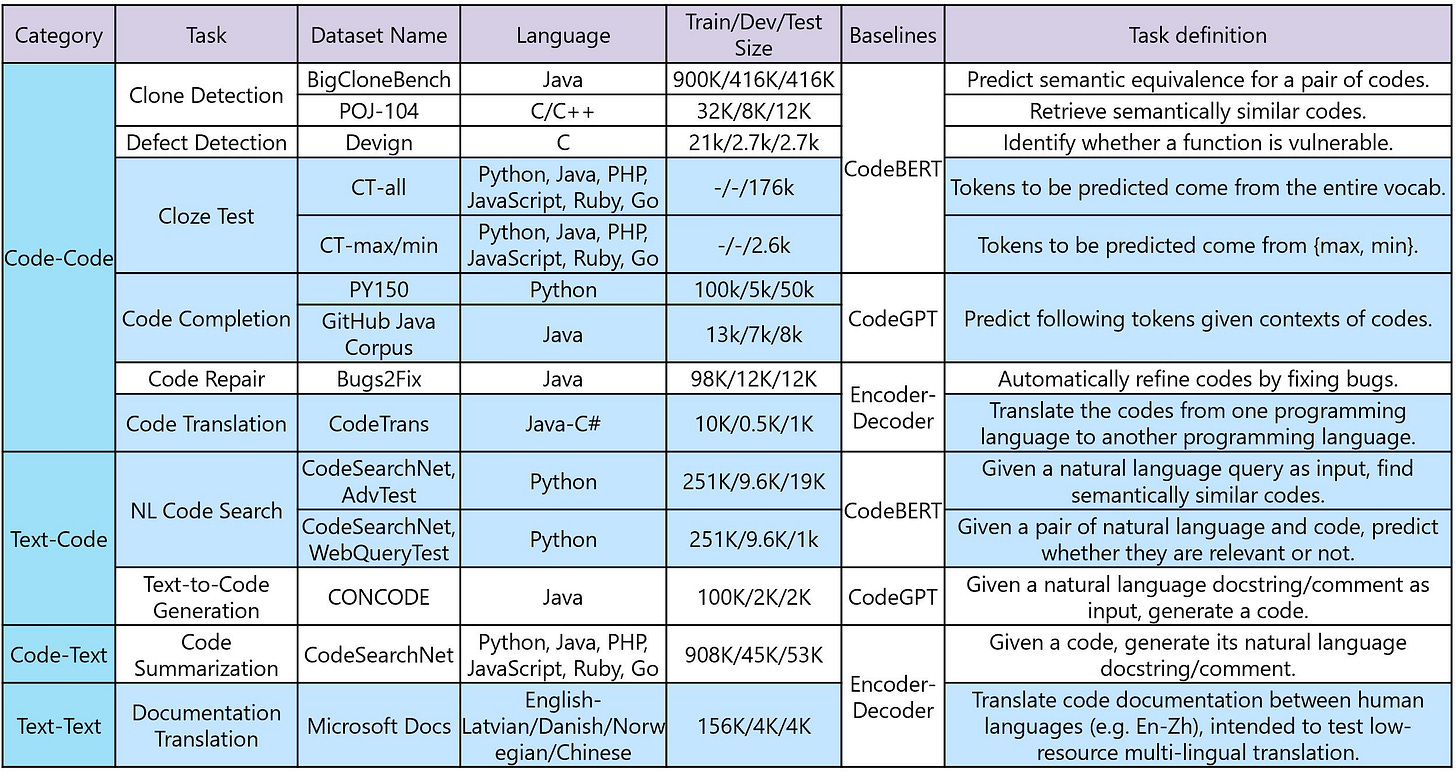

19. CodeXGLUE (General Language Understanding Evaluation benchmark for CODE)

CodeXGLUE tests how well LLMs can understand and work with code. It measures code intelligence across different types of tasks, including:

Code-to-Code: Fixing code errors, finding duplicate code, etc.

Text-to-Code: Searching for code using natural language

Code-to-Text: Explaining what code does

Text-to-Text: Translating technical documentation

Here’s the full list of included tasks, datasets, programming languages, etc.:

Current best scorer: CodeGPT-adapted (75.11 for Code Completion)

20. HumanEval

HumanEval contains 164 programming challenges for evaluating how well an LLM can write code based on instructions. It requires the LLM to have knowledge of basic math, algorithms, and language understanding. Each solution is checked by running it against unit tests, so the code has to actually work, not just look plausible.

Sample problem (prompt in white, completion in yellow):

Current best scorer: GPT-4 (91.0)
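HumanEval scores are usually reported as pass@k: the chance that at least one of k generated solutions passes a problem’s unit tests (pass@1 being the strictest). The Codex paper that introduced HumanEval estimates this without bias by generating n ≥ k samples per problem, counting the c samples that pass, computing 1 - C(n-c, k) / C(n, k), and averaging over all problems. Here’s that formula as a small Python function; the sample counts in the example are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them passed the tests."""
    if n - c < k:
        # Every possible set of k samples contains at least one correct solution.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(per_problem: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, as a percentage. Each entry is (n, c)."""
    return 100 * sum(pass_at_k(n, c, k) for n, c in per_problem) / len(per_problem)


# Hypothetical results for three problems, 10 samples each.
results = [(10, 3), (10, 0), (10, 10)]
print(round(benchmark_score(results, k=1), 2))  # 43.33
print(round(benchmark_score(results, k=5), 2))  # 63.89
```

Note how much more forgiving pass@5 is than pass@1 on the same samples, so it’s worth checking which k a reported HumanEval number refers to.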

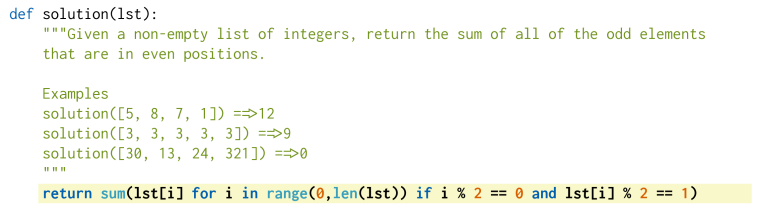

21. MBPP (Mostly Basic Python Programming)

MBPP includes about 1,000 crowd-sourced Python programming problems that should be easy for entry-level programmers. Each problem has a task description, a code solution, and three test cases.

Sample task:

Current best scorer: LEVER + Codex (68.9)
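In MBPP, the test cases are plain Python `assert` statements, so checking a model’s solution boils down to running its code and then running the asserts. Here’s a bare-bones sketch of that check with an invented task. One loud caveat: real evaluation harnesses run generated code in a sandbox with timeouts; calling `exec` on untrusted model output like this is only acceptable in a toy example.

```python
# Invented MBPP-style task: "Write a function to return the sum of the squares
# of the numbers in a list."
TEST_CASES = [
    "assert sum_of_squares([1, 2, 3]) == 14",
    "assert sum_of_squares([]) == 0",
    "assert sum_of_squares([-2]) == 4",
]

good_solution = """
def sum_of_squares(nums):
    return sum(n * n for n in nums)
"""

buggy_solution = """
def sum_of_squares(nums):
    return sum(nums) ** 2
"""


def passes_all_tests(candidate_code: str, test_cases: list[str]) -> bool:
    """Define the candidate's function, then run every assert in the same namespace.

    WARNING: exec() runs arbitrary code. Fine for this toy example; real
    harnesses sandbox the code and enforce time limits.
    """
    namespace: dict = {}
    try:
        exec(candidate_code, namespace)
        for test in test_cases:
            exec(test, namespace)
    except Exception:  # a failed assert, a crash, or a syntax error all count as a fail
        return False
    return True


print(passes_all_tests(good_solution, TEST_CASES))   # True
print(passes_all_tests(buggy_solution, TEST_CASES))  # False
```

A problem counts as solved only if all of its asserts pass; the benchmark score is the percentage of problems solved. The buggy version above happens to pass two of the three tests, which shows why a handful of tests per problem is a fairly coarse check.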

Over to you…

Did this help you get a sense of the different LLM benchmarks and what they measure? Or did I only make things worse?

Alternatively, do you have some expert knowledge in this area? Are there curious facts or nuances that could enrich this post?

I want this list to be as complete and accurate as possible. So if you have any corrections, additions, or other relevant input, I’d love to hear from you.

Send me an email (reply to this one) or leave a comment directly on the site.

¹ A recent episode of AI Explained found multiple non-trivial errors in the MMLU dataset, which may be skewing its measurements by up to 2%.

² This follow-up paper challenges the benchmark by showing that LLMs can perform better on challenging BIG-Bench tasks when prompted with chain-of-thought reasoning.