Sunday Rundown #123: Multimedia Mayhem & Karen Santa

Sunday Bonus #83: Opal app that extracts insights from recorded meetings.

Happy Sunday, friends!

Welcome back to the weekly look at generative AI that covers the following:

Sunday Rundown (free): this week’s AI news + a fun AI fail.

Sunday Bonus (paid): an exclusive segment for my paid subscribers.

In case you missed it, here’s this week’s Thursday deep dive:

Note: If you’re consistently missing out on my emails, remember to check your “Promotions” tab and mark whytryai@substack.com as a “Safe Sender.”

As we all head into the holidays, I’m winding down for the year. This is my last Sunday Rundown of 2025.

Thank you for following along, and I’ll make sure to keep you up to speed in 2026.

Merry Christmas and a Happy New Year!

Let’s get to it.

🗞️ AI news

Here are this week’s AI developments.

You’d think things would be slowing down, but no—this is perhaps the most launch-packed week we’ve had this year.

👩‍💻 AI releases

New stuff you can try right now:

Adobe news:

Firefly has been upgraded with a precise prompt-based editor, camera controls, new partner models, plus free credits until Jan 15. (Try here.)

Generate Presentation is a new feature that turns ideas or docs into polished slide decks with customizable templates, outlines, and easy edits.

Alibaba news:

Qwen Code v0.5.0 comes with VS Code integration, a TypeScript SDK, and broader support for OpenAI-compatible reasoning models.

Qwen-Image-Layered is an open-source diffusion model that breaks images down into editable RGBA layers so you can tweak them independently.

Wan 2.6 is the newest video model with native audio, better lip sync, camera controls, and multi-image reference. (Try here.)

Black Forest Labs launched FLUX.2 [max], its most powerful image generation and editing model, capable of handling up to 10 reference images. (Try here.)

Google news:

Deep Research can now generate Visual Reports that supplement text with charts, images, and structured layouts. (Google AI Ultra accounts only.)

FunctionGemma is a function-calling model designed to reliably turn natural language into structured calls for tools and APIs.

Gemini 2.5 Flash Native Audio is now better at conversations and following complex instructions, and comes with live speech-to-speech translation.

Gemini 3 Flash is a super-fast, capable, cost-effective model that’s now the default in the Gemini app, AI Mode in Search, and available via API.

Google Translate got Gemini-powered upgrades and now offers more natural text translations plus language practice tools.

NotebookLM can now turn messy notes and sources into structured, exportable Data Tables to simplify research and analysis.

T5Gemma 2 is a next-gen encoder-decoder model family with long context plus multimodal and multilingual capabilities.

Kling AI news:

VIDEO 2.6 Motion Control gives creators precise control over character movements using reference images and video clips.

VIDEO 2.6 Voice Control lets you match custom voices to characters for more realistic, multi-language dialogue.

Luma AI’s new Ray3 Modify lets you edit anything in a video, including character swaps, relighting, direct edits using keyframes, and more. (Try here.)

Manus released 1.6 Max, a smarter agent with higher task success, better capabilities, mobile app support, and a visual design editor. (Try here.)

Meta introduced SAM Audio, which lets you isolate any sound in a video using text, visual, or time-based prompts. (Try here.)

Microsoft released TRELLIS.2-4B, a 3D generative model that turns a single image into textured assets in high resolution. (Try on Hugging Face.)

Mistral AI released OCR 3, which can accurately extract structured text from complex scanned documents, forms, and handwriting.

NVIDIA open-sourced Nemotron 3, a family of models designed for efficient, long-horizon agentic AI tasks with full access to weights, data, and tools.

OpenAI news:

Branched Chats are now also available on iOS and Android.

GPT-Image-1.5 is a top-tier image model with better prompt following and text rendering, available in the API and a dedicated ChatGPT Images space.

OpenAI released GPT-5.2-Codex, its best agentic coding model thus far with better long-horizon planning, refactoring, and cybersecurity skills.

Xiaomi open-sourced MiMo-V2-Flash, an ultra-fast foundation model designed for reasoning, coding, and long-running agent workflows. (Try here.)

Zoom rolled out AI Companion 3.0, which turns online meetings into actionable summaries, briefs, and automated follow-ups across Zoom, Drive, and Slack.

🔬 AI research

Cool stuff you might get to try one day:

ByteDance Seed introduced Seedance 1.5 pro, which generates video with native synced audio plus multilingual lip sync and cinematic camera controls.

Google has an experimental CC agent that connects to Gmail, Drive, and Calendar and emails you tailored daily briefings. (Join the waitlist.)

📖 AI resources

Helpful AI tools and stuff that teaches you about AI:

“New Gemini + The ‘Proto-AGI’” [VIDEO] - a great episode of AI Explained.

🔀 AI random

Other notable AI stories of the week:

OpenAI now lets developers submit ChatGPT apps for review and may feature them in a new in-app directory.

xAI launched the Grok Voice Agent API, which lets developers build ultrafast, multilingual voice agents with access to tools and real-time search.

🤦‍♂️ AI fail of the week

It’s Xmas, so here’s a win for a change. Santa wants to speak to the manager.

Send me your AI fail for a chance to be featured in an upcoming Sunday Rundown.

💰 Sunday Bonus #83: Get one-click insights from any video meeting

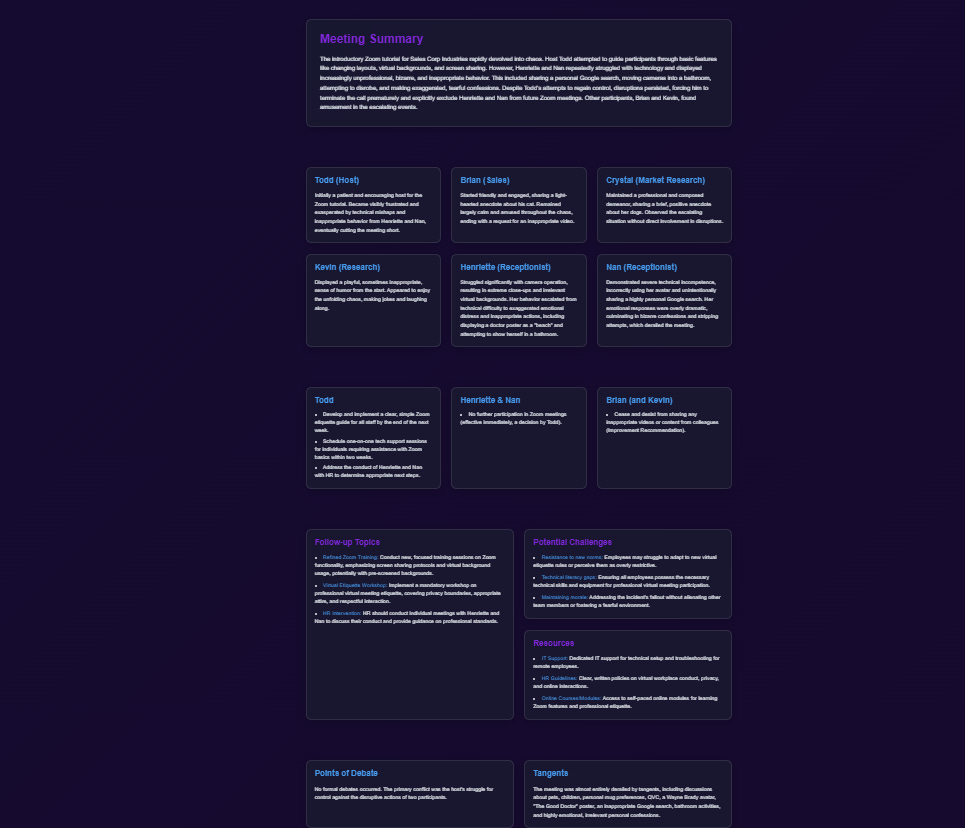

This week, I turned one of my earliest Sunday Bonus guides into a handy Opal app called Meeting Mind.

Simply upload a recorded meeting (or drop in a YouTube link), and the app automatically runs it through every analysis step from the guide, plus a few new ones I’ve added.

Meeting Mind goes beyond basic meeting minutes and action items. It also looks at intangibles such as group dynamics, conversation flow, points of friction, and tangents.

The result is a clean, structured page with takeaways and actionable recommendations for future meetings.