Sunday Rundown #100: Conference Craze & Tralalero Tralala

Sunday Bonus #60: Swipe file with 40+ Claude 4 use cases.

Happy Sunday, friends!

Welcome back to the weekly look at generative AI that covers the following:

Sunday Rundown (free): this week’s AI news + a fun AI fail.

Sunday Bonus (paid): an exclusive segment for my paid subscribers.

Let’s get to it.

🗞️ AI news

Here are the biggest developments of the past two weeks.

We had three major conferences last week, so we’ve got a lot of stuff to catch up on!

👩‍💻 AI releases

New stuff you can try right now:

Amazon launched AI-powered search in Amazon Music to improve artist and song discovery. (In beta for Amazon Music Unlimited subscribers in the US.)

Anthropic news:

The new Claude 4 family comes with long-term memory, tool use, and better coding abilities. (Try Claude Sonnet 4 for free.)

Voice mode is out in beta, letting you finally talk to Claude in real time.

Black Forest Labs launched FLUX.1 Kontext, a multimodal model capable of targeted, in-context image editing based on text or image input.

DeepSeek released an updated R1-0528 model with better reasoning, fewer hallucinations, JSON function calling, and more. (Try for free.)

ElevenLabs launched Multimodal Conversational AI that processes speech and text inputs at the same time, allowing for more flexible and efficient interactions.

Genspark now gives unlimited access to AI Chat for Plus and Pro plans, so users can chat with nine top-tier models on one platform.

GitHub launched a new agent for GitHub Copilot that can autonomously fix bugs, add features, refactor code, and more.

Google announced major updates during the Google I/O 2025 Conference:

Flow is a new film-making platform powered by Google’s state-of-the-art Veo 3 video model (see below).

Gemini 2.5 Flash got better, and Gemini 2.5 Pro got a “Deep Think” mode for complex reasoning. Both models now also come with native audio output.

Gemma 3n is a mobile-first multimodal language model that can run locally on just 2–3GB RAM.

Imagen 4 is a top-tier text-to-image model with high-quality visuals, sharp details, and better-spelled text. (Try it for free on gemini.google.com.)

Jules is an autonomous coding agent that fixes bugs, writes tests, and builds features directly in your GitHub workflow.

Lyria 2 (music model) is now available in more places to more creators, including as a Lyria RealTime version for live jamming in Google AI Studio.

NotebookLM is now available as a mobile app that brings many of its best features directly to people’s phones.

Photos now features a redesigned editor that uses AI to suggest and make tweaks to your pictures.

Search is getting AI-powered improvements across Google products, including deeper answers, live search, smart shopping, and more.

Veo 3 is a paradigm-shifting AI video model that natively incorporates sound, speech, and music into video clips from simple text prompts.

Workspace is also getting AI-powered upgrades like smart email replies, automatic speech translation in Google Meet, and more.

Hume AI rolled out EVI 3, a voice model that outperforms GPT-4o in empathy, expressiveness, response speed, and other parameters in blind testing.

Kling launched an upgraded 2.1 family of video models with 1080p output and better prompt adherence.

Manus now has a Slides tool that builds entire presentations from a simple prompt and lets you edit them directly on the fly.

Microsoft also had many announcements during Microsoft Build 2025:

Copilot Wave 2 brings smarter search, specialized agents, a new Copilot Create experience, and much more.

Notepad, Paint, and Snipping Tool are getting AI-powered enhancements like custom stickers, smart screenshots, and more.

Windows is getting dozens of developer-focused AI improvements, including tools for local inference, model fine-tuning, agent integration, and more.

NVIDIA released Llama Nemotron Nano 4B, an open reasoning model for edge devices. (See also CEO Jensen Huang’s keynote at Computex 2025.)

Perplexity introduced a new Pro feature called Labs that helps you build reports, dashboards, and mini apps in a single workspace.

Salesforce launched Agentforce, bringing agentic AI teammates to applications like Slack to handle support, onboarding, CRM updates, and more.

Stability AI upgraded Stable Video 4D to version 2.0, which can generate sharp multi-angle 4D video from a single input video.

Tencent open-sourced HunyuanVideo-Avatar, which can animate photos from speech or audio input. (Much like HeyGen Avatar IV.)

xAI gave Grok the ability to create charts from live data on the fly.

🔬 AI research

Cool stuff you might get to try one day:

Google also has a lot of stuff in the pipeline:

Gemini Diffusion is an experimental model that generates text from noise significantly faster than the fastest conventional models. (Waitlist here.)

Google Beam is an AI-powered platform that transforms regular 2D video streams into lifelike 3D visual experiences.

LightLab is a diffusion-based tool that lets users retroactively adjust lighting sources and conditions in existing images with realistic results.

NotebookLM will be getting a Video Overviews feature that transforms information into visual slide decks with voiceover.

SignGemma is a multilingual model that can translate live sign language into English text.

Opera teased Neon, an AI-powered browser built for the "agentic web" that can independently browse and take action on your behalf. (Sign up for the waitlist.)

🔀 AI random

Other notable AI stories of the week:

Google launched a SynthID Detector portal that can effectively identify content made with Google’s generative AI across text, audio, images, and video.

🤦‍♂️ AI fail of the week

The two separate character references I gave to Google Whisk…

…the Italian brainrot Whisk cobbled together:

Send me your AI fail for a chance to be featured in an upcoming Sunday Rundown.

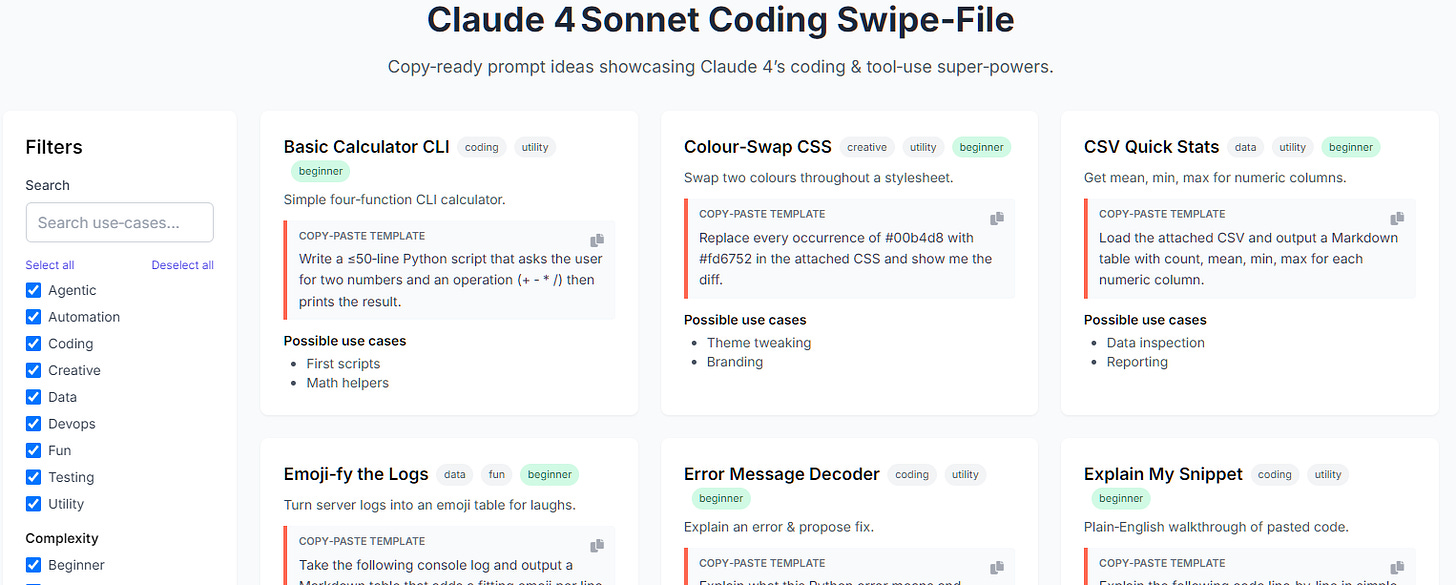

💰 Sunday Bonus #60: 40+ use cases for Claude 4 (swipe file)

My two previous swipe files were quite popular.

Now that the new Claude 4 is topping the WebDev Arena leaderboard and other coding benchmarks, I figured it’d help to make a swipe file of use cases for its best-in-class coding abilities. (Remember, the smaller Claude Sonnet 4 is free for everyone.)

I’m not a pro coder myself, so I partnered with OpenAI o3 to identify the use cases, categorize them, create examples, and put the swipe file together.

We ended up with over 40 use cases in total.

You can filter by category, search by keyword, and one-click copy starter prompts to test them with Claude 4.

I hope this gives you a bit of inspiration for your own needs!