3 Ways to Make Movie Clips with Sora 2

...and what Sora 2 might tell us about the future of AI filmmaking.

For several years now, I’ve been hearing variations of the following:

“In the future, AI will be able to make a full-length movie on demand.”

This view was expressed by some big Hollywood directors…

…showed up in numerous publications…

…and was widely discussed on Reddit and other forums:

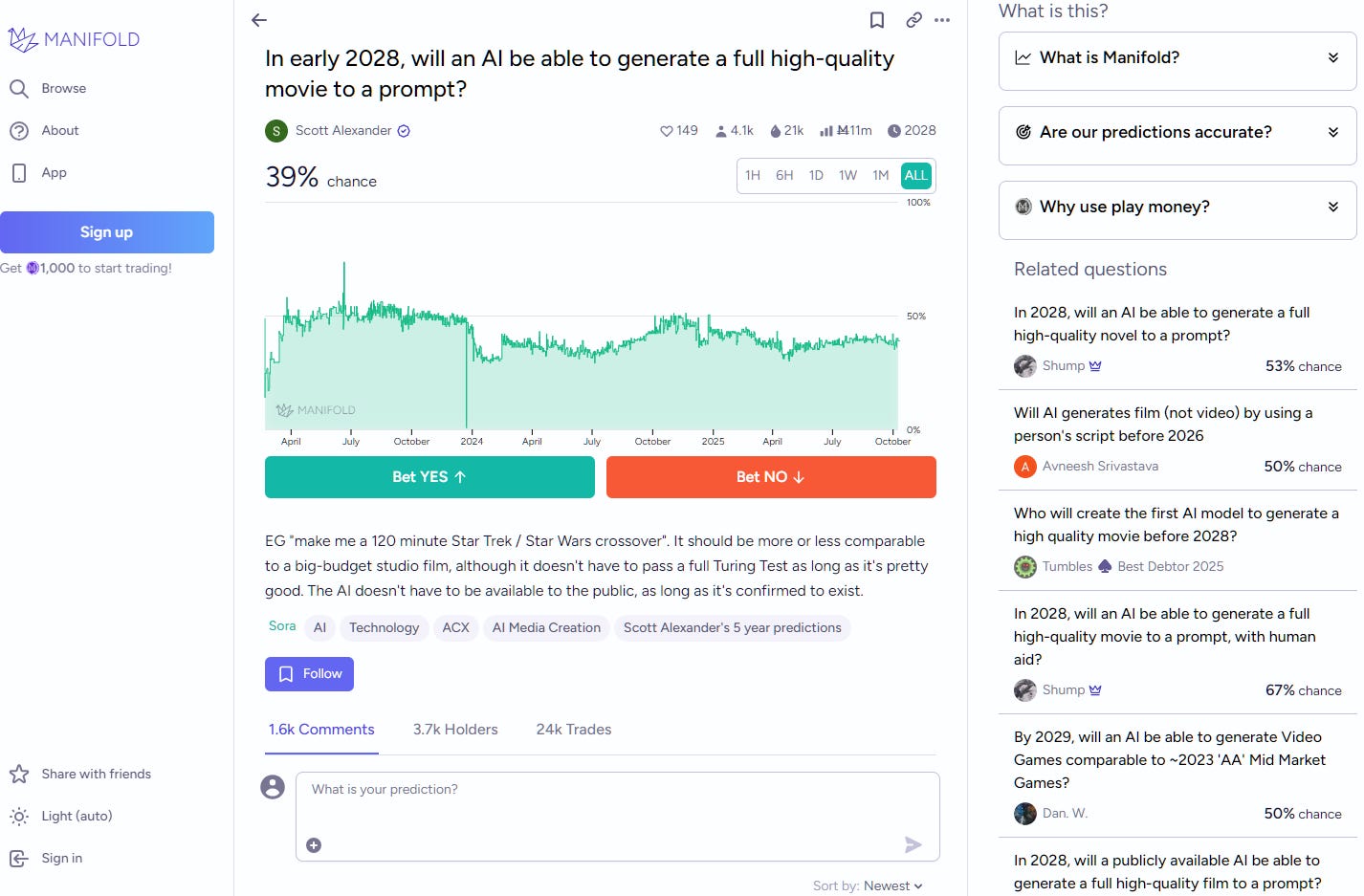

Hell, we even have prediction markets playing the game:

I’ll be honest: Despite closely following AI progress for over three years, I found the idea of AI making an entire movie from scratch too far-fetched.

And that’s coming from someone who has personally tested dozens of different video models and is well aware of what AI video is capable of.

But with the recent launch of Sora 2, the concept of fully AI-generated movies suddenly appears less crazy.

Let’s see why.

AI movies: Then and now

Just last year, I showed you how to make a movie teaser using AI:

At the time, it was quite an involved process, requiring many separate steps and different tools:

- Brainstorming & script (large language models)
- Starting images for video clips (image generators)
- Individual video clips (video models)
- Soundtrack (AI music models)
- Sound effects (AI audio and sound effects tools)
- Editing (video editing tools)

Today, all of this can be done with a short one-line Sora 2 prompt:

Teaser trailer for [BLANK]

Yup, that’s it!

For instance, when I asked for nothing more than…

Prompt: Teaser trailer for a horror movie inspired by children’s shows

Sora 2 gave me this:

When I tried…

Prompt: Teaser trailer for “A Nightmare on Elm Street” as a cheesy romantic comedy

Sora 2 came back with this:

In both cases, I provided only a vague one-line direction, and Sora 2 ran with it.

This is possible because, unlike most prior video models,[1] Sora 2 natively incorporates three new elements:

- Reasoning: Sora 2 understands the full context of my request and has enough world knowledge to blend the concepts, write a script, and stitch everything into a semi-coherent narrative.
- Audio generation: Sora 2 generates its own music, dialogue, and sound effects.
- Character consistency: Note how the creepy doll in the first trailer and the woman in the second one remain the same from scene to scene. Sora 2 can pull this off because the characters are generated within the same pass and are therefore self-consistent.

To me, Sora’s ability to make these self-contained trailers is a preview of what’s possible and a microcosm of what AI filmmaking could eventually look like.

After all, the difference between a 9-second teaser trailer and a feature-length movie is “just” one-and-a-half hours of runtime.

But before we reach that utopian/dystopian future (pick your side), let me show you three different ways you can prompt Sora 2 to tell stories. We’ll go from the most hands-off approach to the one where you’re more involved in setting the narrative and visual direction.

As a callback to my clunky teaser trailer from last year, we’ll have Sora 2 work on the same storyline. This will also give us a good benchmark for comparison. (Spoiler alert: I lose.)

Method #1: Context prompting

The two trailers above are both examples of context prompting.

With context prompting, you share your general idea with Sora 2, but you don’t describe the scenes themselves in detail.

You roll the dice and see how Sora 2 interprets your vision.

This isn’t to say that context prompting never requires any effort.

Sure, the context can be just a vague one-line idea, but it can also be a longer logline or pages of world-building, character development, and more.

The key here is that you’re telling Sora 2 what to visualize, but not how to do it.

To demonstrate, I revisited my Claude chat for the “Wake” movie trailer and asked Claude to distill the concept into a trailer synopsis.

I then used Claude’s output “as is” without any scene directions or instructions about which story beats to highlight.

Here’s exactly what I gave Sora 2:

Prompt: In a world where humanity has achieved symbiosis with technology, organic life and machines have merged into a harmonious ecosystem. This fusion has created a society of augmented humans, cybernetic animals, and living architecture. However, beneath the surface of this seeming utopia lies a hidden truth: the loss of true human consciousness. When a mysterious pulse threatens to unravel this delicate balance, it may offer humanity its last chance to reclaim its essence and “wake” from its technological slumber.

Here’s Sora’s very first take:

I find it fascinating that Sora 2 overlapped quite a bit with Claude in how they chose to illustrate certain concepts. For instance, the cybernetic deer was not in my prompt, but Sora 2 arrived at this exact visual, just as Claude did during later brainstorming stages.[2]

Sora distilled our top-level synopsis into an ultra-short teaser that is arguably more effective in setting the scene than my one-and-a-half-minute-long mishmash.

Context prompting is great for early concept stages, when you want to see your vision come to life but don’t yet have a strong opinion about the exact story beats.

But what if you’ve already written the script? Well...

Method #2: Script prompting (scene-by-scene)

When I shared my “5S Framework” for prompting video models, I mainly had short, single scenes in mind.

But Sora 2 can handle more than that.

While testing character consistency, I tried the following sequence of scenes as a prompt:

Prompt:

SCENE #1: Woman waking up

SCENE #2: The same woman happily brushing teeth in front of a bathroom mirror

SCENE #3: The same woman having cereal for breakfast by a kitchen table, smiling

SCENE #4: The same woman holding a bloody knife in a dark alley at night with a horrified expression

SCENE #5: Fade to black

Sora nailed it on its first try:

That’s the essence of script prompting.

And yes, you can prompt for specifics like the soundtrack, scene details, and even spoken dialogue. The output isn’t always flawless, but you can usually get something workable with enough re-rolls.

To test this concept with our trailer, I asked Claude to create a 4-5 scene teaser script for Sora 2. Claude gave me this, which I immediately passed on to Sora:

Prompt:

SCENE 1 (2 seconds): Rapid montage of techno-organic world - tree with glowing circuits, person with neon veins beneath skin, cybernetic deer leaping, futuristic city with bioluminescent buildings. Ambient electronic hum with organic undertones.

SCENE 2 (1.5 seconds): Extreme close-up of half-organic, half-mechanical heart beating in perfect rhythm. Synchronized mechanical and organic sounds.

SCENE 3 (2 seconds): Blinding white EMP flash across cityscape. All lights die. Heart sputters and flatlines with electronic beep. Sudden silence.

SCENE 4 (1.5 seconds): Extreme close-up: human eyes snap open, gasping breath. Purely organic, no technology visible.

SCENE 5 (1 second): Black screen. Title “WAKE” fades in with soft glow. Quiet, resonant sound.

I rolled this prompt about a dozen times, with generally similar but varying outcomes:

Here’s the very first one:

Not perfect, but quite close, right?

Again, a year ago this took me a full day of prompting multiple AI tools and cobbling everything together in editing software. Sora did it from one text prompt in a minute.

I used a simplistic scene-by-scene breakdown, but you can also feed Sora properly formatted script pages, and it’ll usually do a passable job.

So if you have a more fleshed-out idea of your scenes, give script prompting a go.
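Because the scene-by-scene format is so regular, it’s easy to assemble in code, which helps when you’re juggling scene lengths across many re-rolls. Here’s a minimal Python sketch that joins scenes into the same `SCENE n (x seconds): ...` layout as the Wake prompt and checks that the durations fit inside a single clip. The `Scene` class, the `build_script_prompt` helper, and the 9-second budget are assumptions for illustration. The budget comes from the clip length mentioned in this post, not from any official Sora limit. The sketch only builds the prompt text, which you’d still paste into Sora 2 yourself.

```python
from dataclasses import dataclass

# Assumption: roughly 9 seconds of usable runtime per Sora 2 clip,
# based on the clip length discussed in this post (not an official limit).
CLIP_BUDGET_SECONDS = 9.0


@dataclass
class Scene:
    """One story beat: what happens on screen and how long it should last."""
    description: str
    seconds: float


def build_script_prompt(scenes: list[Scene], budget: float = CLIP_BUDGET_SECONDS) -> str:
    """Format scenes as 'SCENE n (x seconds): ...' blocks separated by blank lines.

    Raises ValueError if the combined durations exceed the clip budget.
    """
    total = sum(scene.seconds for scene in scenes)
    if total > budget:
        raise ValueError(f"Scenes add up to {total:g}s, over the {budget:g}s budget")

    blocks = []
    for number, scene in enumerate(scenes, start=1):
        # Singular "second" for exactly one second, as in the Wake script.
        unit = "second" if scene.seconds == 1 else "seconds"
        blocks.append(f"SCENE {number} ({scene.seconds:g} {unit}): {scene.description}")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    # Shortened versions of the Wake teaser scenes (2 + 1.5 + 2 + 1.5 + 1 = 8 seconds).
    wake = [
        Scene("Rapid montage of techno-organic world.", 2),
        Scene("Extreme close-up of half-organic, half-mechanical heart beating.", 1.5),
        Scene("Blinding white EMP flash across cityscape. All lights die.", 2),
        Scene("Extreme close-up: human eyes snap open, gasping breath.", 1.5),
        Scene('Black screen. Title "WAKE" fades in with soft glow.', 1),
    ]
    print(build_script_prompt(wake))
```

Raising an error instead of silently trimming scenes is a deliberate choice: it leaves the decision about which beat to shorten to you. Treat the durations as hints, since Sora 2 isn’t guaranteed to cut exactly on them.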

Method #3: Storyboard prompting (visuals)

Now, what if you weren’t content with just describing the story beats?

What if you also wanted to dictate the visuals?

That’s possible! Kinda.

Sora lets you upload images along with your prompt. Individual images can be used as starting frames to establish the setting.

But you can also upload entire storyboard grids.

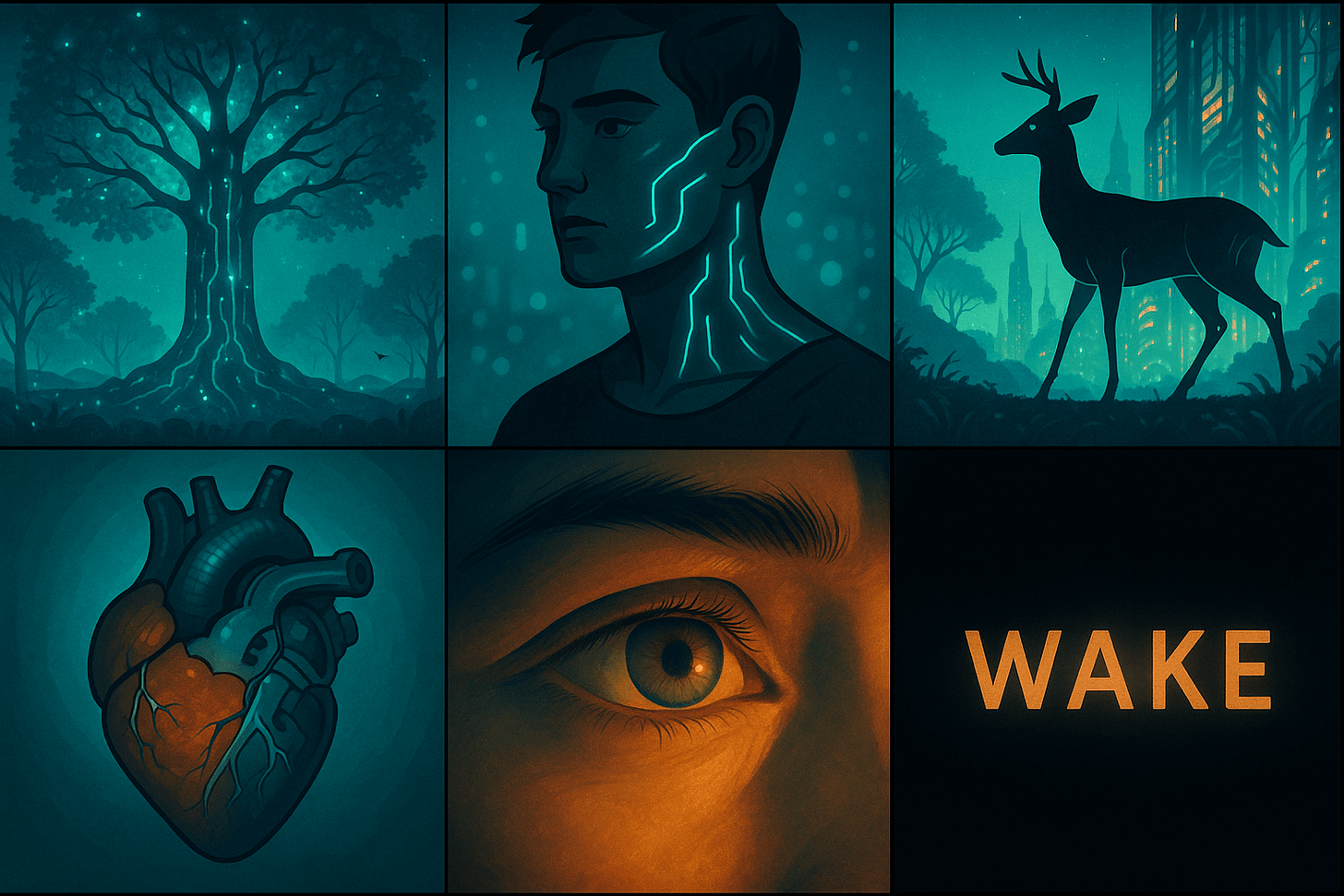

First, create your storyboard. For our demo, I fed the entire Wake synopsis and scene script from Claude to ChatGPT and asked it to generate the storyboard grid:

Prompt: Make a horizontal image with a grid of 3x2 squares, covering the 6 main story beats of the following movie teaser: [CONTEXT FROM CLAUDE]

Here’s the result:

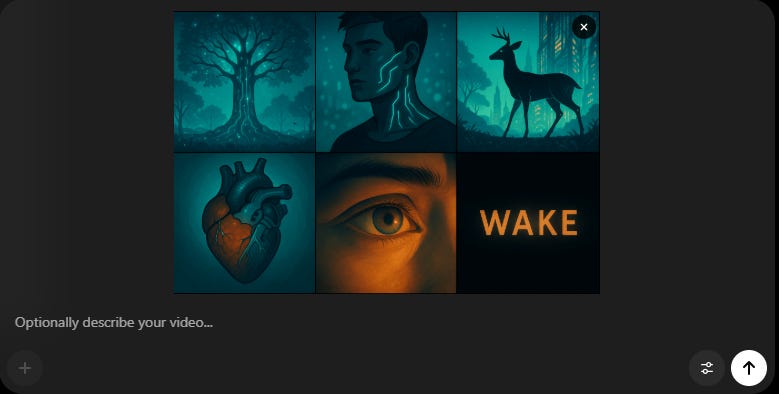

I then submitted that image to Sora 2 without any text prompt:

Here’s the result:

Without any context, Sora 2 improvises the storyline, but it mostly follows the image sequence and faithfully mimics the look.

This way, storyboard prompting lets you establish the scene flow and the visual style in one go.

The best part? You can combine the storyboard with the other two methods by adding background details about the movie and/or scene-by-scene descriptions as your text prompt, as long as they align with the uploaded image grid.

A few caveats:

- Content restrictions: Sora will typically reject any gory or violent images, photos of real people, and other visuals that go against the community guidelines. If you want to include people in your storyboard, make them look stylized instead of realistic.
- Grid limitations: Aim for no more than 8 separate images in the uploaded storyboard. In my experience, Sora can’t accurately and consistently follow more than that without ignoring some images or getting confused.
- Use dialogue sparingly: You only have 9 seconds, so you won’t be able to squeeze in a Shakespearean soliloquy just yet. Also, trying to prompt multiple speakers is a gamble, as Sora may assign speaking lines to the wrong character.
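The 3x2 grid prompt and the eight-image rule of thumb from the caveats above can be rolled into a small helper. It picks a layout for a given number of story beats and fills in the ChatGPT storyboard prompt used earlier. This is a hypothetical Python sketch: `grid_shape`, `storyboard_prompt`, and the one-row/two-row layout rule are my own assumptions, and the eight-panel cap is an experience-based guideline, not a documented Sora limit.

```python
import math

# Rule of thumb from this post's caveats, not a documented Sora limit.
MAX_PANELS = 8

PROMPT_TEMPLATE = (
    "Make a horizontal image with a grid of {cols}x{rows} squares, "
    "covering the {beats} main story beats of the following movie teaser: {context}"
)


def grid_shape(beats: int) -> tuple[int, int]:
    """Return (columns, rows) for a storyboard that is never taller than it is wide.

    Assumed layout: one row for up to four beats, two rows for five to eight.
    """
    if not 1 <= beats <= MAX_PANELS:
        raise ValueError(f"Use between 1 and {MAX_PANELS} panels, got {beats}")
    rows = 1 if beats <= 4 else 2
    cols = math.ceil(beats / rows)
    return cols, rows


def storyboard_prompt(beats: int, context: str) -> str:
    """Fill the grid prompt template with a layout that fits the number of beats."""
    cols, rows = grid_shape(beats)
    return PROMPT_TEMPLATE.format(cols=cols, rows=rows, beats=beats, context=context)


if __name__ == "__main__":
    # Six beats gives the same 3x2 grid used for the Wake storyboard.
    print(storyboard_prompt(6, "[CONTEXT FROM CLAUDE]"))
```

With five or seven beats, one cell of the grid stays empty, which is worth keeping in mind when you check the generated storyboard.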

Now go out there and make some mini movies!

What’s next?

“This is the worst these AI models will ever be.”

While I’m tired of this overused phrase, it happens to be true.

Sora 2, Veo 3, and other video models will continue getting better.

As such, we’re gradually moving toward a future where AI is a natural part of the filmmaking process.

I see two paths forward here, which aren’t mutually exclusive.

Path one: Models like Sora 2 become capable of making longer and longer clips, until one day they can output an entire movie in one go. If we treat Sora 2 and Veo 3 as proof-of-concept models, there’s little reason to expect video generation to max out at around 10 seconds.

Path two: We get increasingly capable agentic studio tools that can autonomously write scripts, create storyboards, and pull together outputs from different third-party models into a coherent whole. LTX Studio is a great example of this. What’s cool about such tools is that they open up the “black box” and let human filmmakers tweak and edit any scene or script element manually, retaining more control.

However it plays out, Sora 2 is clearly just a prototype on the road to an AI-assisted future of film.

Whether that’s a future we will enjoy remains to be seen.

🫵 Over to you…

What do you think of these prompting approaches? Which of them worked best for you? Have you come across other Sora 2 prompting tips? Feel free to share your tips or Sora 2 creations.

Leave a comment or drop me a line at whytryai@substack.com.

Thanks for reading!

If you enjoy my writing, here’s how you can help:

❤️Like this post if it resonates with you.

🔄Share it to help others discover this newsletter.

🗣️Comment below—I love hearing your opinions.

Why Try AI is a passion project, and I’m grateful to those who help keep it going. If you’d like to support my work and unlock cool perks, consider a paid subscription:

[1] With the notable exception of Veo 3.

[2] There’s room to explore how model training data and the homogenization of concepts play into this, but that’s a story for another post.